1.2.1. Ethical Trustworthiness


Ethical Trustworthiness: Aligning AI with Human Values

As introduced in Section 1.2, trustworthiness must be structurally embedded into AI systems, not assumed. Among its pillars, ethical trustworthiness ensures that AI systems promote fairness(1), avoid harm, and align with societal values.

- (1) Fairness: the principle that AI systems should produce outcomes that are impartial and just across different population groups, avoiding unjustified or discriminatory bias. (ISO/IEC 22989:2022)

Ethical trustworthiness is essential if AI is to have a positive impact on people and society. It ensures that technological innovations go beyond mere efficiency and are implemented fairly and responsibly. For people to trust an AI system, it must meet ethical standards, which makes fairness a key determinant of successful technology adoption.

These principles are not theoretical—they play out in real-world decisions. A widely cited case involving Apple and Goldman Sachs illustrates how ethical gaps in AI design can lead to unequal treatment, public backlash, and mistrust.

Apple and Goldman Sachs stated that gender was not a factor in their credit limit algorithm, but the reported outcomes told another story. Several customers reported that, even in couples with shared finances, husbands received much higher credit limits than their wives. David Heinemeier Hansson reported a 20× limit difference between himself and his wife. Apple co-founder Steve Wozniak shared a similar experience. Figure 6 symbolically represents the risks of algorithmic discrimination in opaque systems.

A symbolic depiction of algorithmic bias in credit limit decisions based on the 2019 Apple Card case

[Illustrative AI-generated image]

AI models trained on historical data can absorb and reproduce existing inequalities, especially if fairness is not proactively enforced. For example, if historical credit data reflects gender-based bias, an AI system may reproduce those patterns, even without explicitly using gender as a variable.

To prevent this, fairness techniques must be applied at multiple phases of the AI lifecycle:

- During data preparation:

    - Apply rebalancing to ensure equitable representation

    - Use disparate impact analysis to detect group-level disparities

- During model design:

    - Use counterfactual fairness testing to evaluate if outcomes change when only sensitive attributes (like gender) are altered

    - Integrate fairness-aware constraints or regularization to mitigate systemic bias
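The two data-preparation steps above can be sketched in a few lines of plain Python. This is a minimal illustration, not a reference implementation: the toy `groups` and `labels` lists are invented, and the rebalancing shown is one common choice (reweighing each group/label combination so that group and label look statistically independent). A frequently used heuristic, not taken from this document, flags a disparate impact ratio below 0.8.

```python
from collections import Counter

def selection_rates(groups, labels):
    """Share of positive labels (1) within each group."""
    totals = Counter(groups)
    positives = Counter(g for g, y in zip(groups, labels) if y == 1)
    return {g: positives[g] / totals[g] for g in totals}

def disparate_impact_ratio(groups, labels, protected, reference):
    """Selection rate of the protected group divided by that of the reference group."""
    rates = selection_rates(groups, labels)
    return rates[protected] / rates[reference]

def reweighing_weights(groups, labels):
    """Weight per (group, label) pair: P(group) * P(label) / P(group, label)."""
    n = len(labels)
    group_counts = Counter(groups)
    label_counts = Counter(labels)
    joint_counts = Counter(zip(groups, labels))
    return {
        (g, y): (group_counts[g] * label_counts[y]) / (n * joint_counts[(g, y)])
        for (g, y) in joint_counts
    }

# Hypothetical historical data: 10 women (3 approved), 10 men (6 approved).
groups = ["F"] * 10 + ["M"] * 10
labels = [1] * 3 + [0] * 7 + [1] * 6 + [0] * 4

ratio = disparate_impact_ratio(groups, labels, protected="F", reference="M")
weights = reweighing_weights(groups, labels)

print(f"disparate impact ratio: {ratio:.2f}")
for key in sorted(weights):
    print(key, round(weights[key], 3))
```

With these weights applied as sample weights during training, the weighted approval rate is the same for both groups in the toy data. Whether reweighing is appropriate for a real dataset depends on why the disparity exists, so the weights are a starting point for review rather than an automatic fix.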

These design-stage safeguards highlight a deeper truth: ethics must be embedded, not retrofitted.
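A simple version of the counterfactual test from the model-design stage is an attribute-flip check: score each record once per value of the sensitive attribute and count how often the output changes. The sketch below assumes hypothetical models (`biased_limit`, `neutral_limit`) and invented applicant records. It is a simplification of counterfactual fairness, which in its causal form also accounts for features influenced by the sensitive attribute, so a flip rate of zero does not rule out bias through proxy variables.

```python
def counterfactual_flip_rate(predict, records, attribute, values):
    """Share of records whose prediction changes when only `attribute` is swapped."""
    changed = 0
    for record in records:
        # Score the same record once per candidate value of the sensitive attribute.
        outcomes = {predict({**record, attribute: value}) for value in values}
        if len(outcomes) > 1:
            changed += 1
    return changed / len(records)

# Hypothetical credit limit models, for illustration only.
def biased_limit(applicant):
    base = applicant["income"] * 0.2
    return base * (0.5 if applicant["gender"] == "F" else 1.0)

def neutral_limit(applicant):
    return applicant["income"] * 0.2

applicants = [
    {"income": 50000, "gender": "F"},
    {"income": 80000, "gender": "M"},
    {"income": 65000, "gender": "F"},
]

biased_rate = counterfactual_flip_rate(biased_limit, applicants, "gender", ["F", "M"])
neutral_rate = counterfactual_flip_rate(neutral_limit, applicants, "gender", ["F", "M"])
print(biased_rate, neutral_rate)
```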

Quote

“Unchecked bias in data leads to systematic harm, regardless of intent.”

According to ISO/IEC TR 24028 and ISO/IEC 23894, building ethically trustworthy AI requires identifying and mitigating risks during data collection, preprocessing, and validation, not just in model outputs. Bias detection must be proactive, repeatable, and auditable.

Beyond the development stage, continuous monitoring after deployment is equally essential. Tools and processes include:

- Adversarial testing: simulating edge cases to expose hidden bias

- Drift detection: tracking fairness degradation over time in live settings

For example, if women systematically receive lower credit limits than men despite similar financial records, this should trigger a formal investigation and system recalibration. These techniques ensure that fairness is not a one-time design feature but a continuous operational requirement.
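The monitoring idea described above can be sketched as a periodic check on live decisions. The example assumes decisions arrive in batches of `(group, approved)` pairs and that a fixed `max_gap` threshold triggers review; the batch data, the threshold value, and the function names are all hypothetical.

```python
def approval_rate(batch, group):
    """Approval rate for one group in a batch, or None if the group is absent."""
    outcomes = [approved for g, approved in batch if g == group]
    return sum(outcomes) / len(outcomes) if outcomes else None

def fairness_drift_alerts(batches, group_a, group_b, max_gap=0.1):
    """Return (batch index, gap) for every batch whose approval-rate gap exceeds max_gap."""
    alerts = []
    for index, batch in enumerate(batches):
        rate_a = approval_rate(batch, group_a)
        rate_b = approval_rate(batch, group_b)
        if rate_a is None or rate_b is None:
            continue  # not enough data to compare in this batch
        gap = abs(rate_a - rate_b)
        if gap > max_gap:
            alerts.append((index, round(gap, 3)))
    return alerts

# Hypothetical weekly batches of (group, approved) decisions.
weekly_batches = [
    [("F", 1), ("F", 1), ("F", 0), ("F", 1), ("M", 1), ("M", 1), ("M", 0), ("M", 1)],
    [("F", 1), ("F", 0), ("F", 0), ("F", 1), ("M", 1), ("M", 1), ("M", 0), ("M", 1)],
    [("F", 0), ("F", 0), ("F", 0), ("F", 1), ("M", 1), ("M", 1), ("M", 1), ("M", 1)],
]

alerts = fairness_drift_alerts(weekly_batches, "F", "M", max_gap=0.2)
print(alerts)
```

In this toy data the first week shows no gap, while the second and third weeks exceed the threshold and would be flagged for investigation. A production monitor would also need minimum sample sizes and some statistical test, since small batches produce noisy rates.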

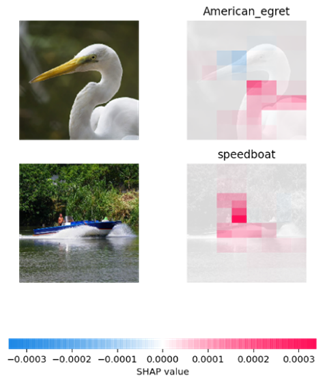

To support this effort, explainability tools like SHAP (SHapley Additive exPlanations) help clarify how AI decisions are made. SHAP identifies which input features contribute most to an outcome—visualized in Figure 7.

(Source)
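To make the idea behind SHAP concrete, the sketch below computes exact Shapley values by brute force for a tiny, hypothetical linear scoring model. It does not use the SHAP library: the feature names, weights, applicant, and baseline values are invented, and "removing" a feature is modelled by resetting it to a baseline value, which is one common convention. Brute-force enumeration grows factorially with the number of features, which is why practical tools rely on approximations.

```python
from itertools import permutations

def shapley_values(value_fn, features):
    """Exact Shapley values: average marginal contribution over all feature orderings."""
    totals = {feature: 0.0 for feature in features}
    orderings = list(permutations(features))
    for ordering in orderings:
        present = set()
        for feature in ordering:
            before = value_fn(frozenset(present))
            present.add(feature)
            after = value_fn(frozenset(present))
            totals[feature] += after - before
    return {feature: total / len(orderings) for feature, total in totals.items()}

# Hypothetical applicant, baseline (e.g. an average applicant), and linear model.
applicant = {"income": 80.0, "debt": 20.0, "tenure": 5.0}
baseline = {"income": 50.0, "debt": 30.0, "tenure": 3.0}
weights = {"income": 0.5, "debt": -0.4, "tenure": 2.0}

def score_with(present):
    """Model score when only `present` features use the applicant's values."""
    return sum(
        weights[f] * (applicant[f] if f in present else baseline[f])
        for f in weights
    )

contributions = shapley_values(score_with, list(weights))
for feature, value in contributions.items():
    print(feature, round(value, 2))
```

The contributions sum to the difference between the applicant's score and the baseline score, which is the additivity property that makes this kind of attribution easy to audit.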

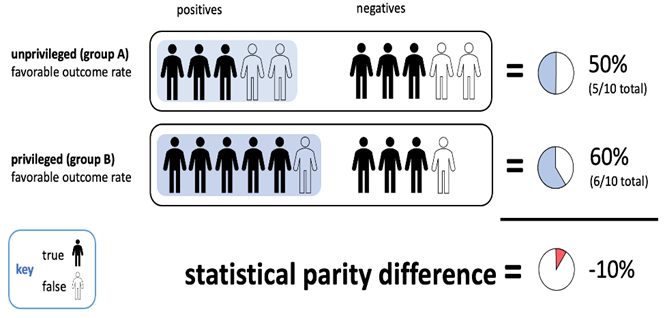

Complementing this, Statistical Parity (Figure 8) checks whether decision outcomes are equitably distributed across groups. It highlights potential disparities by comparing selection rates among different demographics.

(Source)
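As a rough illustration of the statistical parity check, the following sketch compares selection rates across any number of groups and reports the largest gap. The group labels and decision data are made up, and what counts as an acceptable gap is a policy decision that this document does not specify.

```python
from collections import defaultdict

def selection_rate_by_group(decisions):
    """Map each group to its share of positive decisions."""
    counts = defaultdict(lambda: [0, 0])  # group -> [selected, total]
    for group, selected in decisions:
        counts[group][0] += int(selected)
        counts[group][1] += 1
    return {group: selected / total for group, (selected, total) in counts.items()}

def statistical_parity_gap(decisions):
    """Largest difference in selection rate between any two groups, plus the rates."""
    rates = selection_rate_by_group(decisions)
    return max(rates.values()) - min(rates.values()), rates

# Hypothetical decisions as (group, selected) pairs for three groups.
decisions = (
    [("A", 1)] * 6 + [("A", 0)] * 4
    + [("B", 1)] * 5 + [("B", 0)] * 5
    + [("C", 1)] * 3 + [("C", 0)] * 7
)

gap, rates = statistical_parity_gap(decisions)
print(rates, round(gap, 2))
```

A gap of zero means every group is selected at the same rate. Statistical parity looks only at outcomes, so it is usually read alongside other evidence, such as feature attributions, before concluding that a system is or is not fair.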

Ethical trustworthiness means more than meeting compliance requirements. It demands that AI systems actively prevent discrimination, promote equal treatment, and foster public confidence. These values must be integrated across the entire lifecycle—from data collection and model design to real-world deployment and oversight.

Ultimately, ethical trustworthiness is a cornerstone of trustworthy AI. It ensures that AI systems are not only accurate and efficient but also accountable to human values, and resilient against social harm. Fairness is not just a feature—it’s a requirement for adoption, acceptance, and legitimacy.