1.3.3. 7 requirements of the EU AI Act


The EU AI Act: A Global Turning Point for Trustworthy AI

In April 2021, the European Commission proposed the EU AI Act, the world's first comprehensive AI legislation, designed to ensure that AI technologies are developed and deployed in a safe, trustworthy, and human-centered manner. The Act was formally adopted in 2024 and entered into force on 1 August 2024. It introduces a regulatory framework that spans the AI lifecycle (design, development, deployment, and use) and places special focus on high-risk AI systems.

The EU AI Act has drawn global attention for its risk-based approach to managing AI at scale and for codifying ethical, legal, and technical standards into enforceable law. It has shifted the international conversation on AI governance from voluntary guidelines toward lifecycle-based compliance.

Key Requirements of the EU AI Act

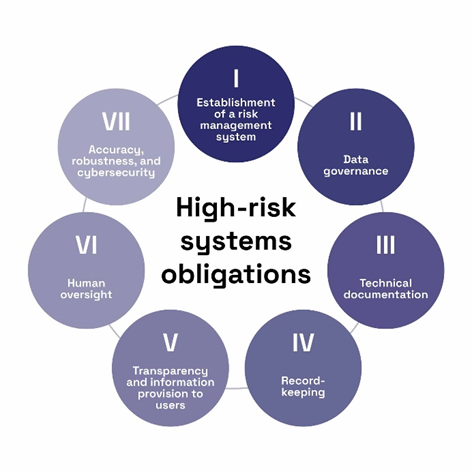

As illustrated in Figure 10, the seven core requirements outlined in the Act serve as baseline criteria for all high-risk AI systems. They ensure that AI technologies operate in ways that are fair, secure, explainable, and accountable, and they apply across the entire system lifecycle.

Table 3: EU AI Act – Seven Key Requirements

| Article | Principle | Requirement Description |
|---|---|---|
| Article 9 | Risk Management System | Identify and mitigate potential risks at every development stage through systematic planning. |
| Article 10 | Data and Data Governance | Use high-quality, representative, and bias-mitigated data for training and evaluation. |
| Article 11 | Technical Documentation | Maintain detailed records of system purpose, limitations, and performance specifications. |
| Article 12 | Record-Keeping | Log operational data to support traceability, auditing, and accountability. |
| Article 13 | Transparency and User Information | Clearly inform users of how the system works and how it should be used safely. |
| Article 14 | Human Oversight | Ensure that meaningful human control is always possible; AI should support, not replace, human judgment. |
| Article 15 | Accuracy, Robustness, and Cybersecurity | Build resilience into systems to prevent failures, malfunctions, and external threats. |

Source: European Commission (2024)

These seven requirements are more than a regulatory checklist. Together they form the core of modern AI trust governance, providing both ethical direction and technical structure for high-risk systems.
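Article 12 calls for operational events to be logged for traceability and auditing, but the table above does not prescribe a storage format. As a rough illustration, the Python sketch below keeps an append-only, hash-chained log of model decisions so that later edits to past entries can be detected during an audit. The `AuditLog` class, its field names, the model names, and the hash-chaining scheme are hypothetical choices made for this example, not requirements stated in the Act.

```python
import hashlib
import json
from datetime import datetime, timezone
from typing import Optional

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def sha256_hex(text: str) -> str:
    """Return the SHA-256 hex digest of a UTF-8 string."""
    return hashlib.sha256(text.encode("utf-8")).hexdigest()


class AuditLog:
    """Hypothetical append-only, hash-chained decision log (illustrative only)."""

    def __init__(self) -> None:
        self.entries: list[dict] = []

    def record(self, model_version: str, raw_input: str, output: str,
               reviewer: Optional[str] = None) -> dict:
        prev_hash = self.entries[-1]["entry_hash"] if self.entries else GENESIS
        body = {
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "model_version": model_version,
            # Store a digest of the input rather than raw (possibly personal) data.
            "input_digest": sha256_hex(raw_input),
            "output": output,
            "reviewer": reviewer,
            "prev_hash": prev_hash,
        }
        # The entry hash covers the previous entry's hash, so editing any
        # earlier entry invalidates the chain from that point onward.
        entry = dict(body, entry_hash=sha256_hex(json.dumps(body, sort_keys=True)))
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        """Recompute every hash and check that each entry links to the one before."""
        prev_hash = GENESIS
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "entry_hash"}
            if body["prev_hash"] != prev_hash:
                return False
            if sha256_hex(json.dumps(body, sort_keys=True)) != entry["entry_hash"]:
                return False
            prev_hash = entry["entry_hash"]
        return True


if __name__ == "__main__":
    log = AuditLog()
    # Model names and reviewer IDs below are made up for the demo.
    log.record("credit-model-1.2", "applicant-42 features", "refer", reviewer="analyst-7")
    log.record("credit-model-1.2", "applicant-43 features", "approve")
    print(log.verify())  # True
    log.entries[0]["output"] = "approve"  # simulate tampering with an earlier entry
    print(log.verify())  # False
```

Chaining each entry to the previous entry's hash is one common way to make a log tamper-evident. A real deployment would also need durable storage, access control, and retention rules, which this sketch leaves out.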

Korea's AI Basic Act: Regional Alignment with Global Governance

Building on this momentum, South Korea passed its own AI Basic Act in December 2024; the law will take effect in January 2026 (Ministry of Science and ICT, 2026). It is one of the first laws in Asia to comprehensively address AI ethics, safety, and rights-based design through a legally binding framework.

Key features of Korea’s AI Basic Act include:

- A risk-based classification of high-impact AI systems

- Mandatory risk assessments, human oversight, and transparent decision-making

- User notification when AI affects individual rights

- Enforcement mechanisms, including administrative fines up to KRW 30 million (~$20,870)

Korea’s legislation aligns with the EU model but also emphasizes human-centered innovation and responsible growth, reinforcing the country’s position as a regional leader in trustworthy AI governance.

How the EU AI Act Drives Trust

Each requirement within the EU AI Act is designed to enhance the trustworthiness of AI by embedding safety, fairness, and transparency into system design and operation.

- Data governance rules on bias and representativeness (Article 10) are designed to keep AI systems from discriminating against particular groups.

- Post-market monitoring, supported by record-keeping (Article 12), means that problems can be detected and corrected after deployment, not only prevented beforehand.

- Perhaps most critically, the human oversight provision protects against unchecked automation by ensuring that humans remain decision-makers in high-impact contexts.

Together, these elements foster public trust, regulatory clarity, and system resilience.
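To make the human oversight idea concrete, here is a minimal Python sketch of a routing rule. The model decides alone only for low-impact, high-confidence cases; everything else goes to a person who sees the model's recommendation and can override it. The 0.9 confidence threshold, the approve/deny labels, and the `ask_human` callback are hypothetical assumptions. Article 14 describes oversight goals, not a specific mechanism like this one.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Decision:
    proposed: str    # what the model recommended
    final: str       # what was actually decided
    decided_by: str  # "model" or "human"


def route_decision(score: float, high_impact: bool,
                   ask_human: Callable[[str, float], str],
                   confidence_threshold: float = 0.9) -> Decision:
    """Let the model act alone only for low-impact, high-confidence cases.

    `score` is a hypothetical model probability for the positive outcome;
    the threshold and labels are illustrative, not taken from the Act.
    """
    proposed = "approve" if score >= 0.5 else "deny"
    confidence = max(score, 1.0 - score)
    if high_impact or confidence < confidence_threshold:
        # The human sees the model's recommendation but makes the final call.
        final = ask_human(proposed, score)
        return Decision(proposed=proposed, final=final, decided_by="human")
    return Decision(proposed=proposed, final=proposed, decided_by="model")


if __name__ == "__main__":
    def cautious_reviewer(proposed: str, score: float) -> str:
        # Stand-in for a real reviewer: rejects borderline approvals.
        return "deny" if score < 0.7 else proposed

    print(route_decision(0.97, high_impact=False, ask_human=cautious_reviewer))
    # Decision(proposed='approve', final='approve', decided_by='model')
    print(route_decision(0.65, high_impact=True, ask_human=cautious_reviewer))
    # Decision(proposed='approve', final='deny', decided_by='human')
```

The sketch records `proposed` alongside `final` so that both the model's recommendation and the human's decision stay visible. Reviewers can then check how often people actually overrode the system.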

Thinkbox

“The EU AI Act reverses the regulatory default: from ‘allowed until restricted’ to ‘restricted until proven trustworthy.’”

Under this risk-based framework, high-risk AI systems must demonstrate compliance before entering the market, through pre-deployment conformity assessments, documentation, and human oversight (Article 43, EU AI Act).

This shift moves AI development from a reactive to a preventive model, making trustworthiness a prerequisite, not an afterthought.

Global Ripple Effects: The EU AI Act's Influence

The EU AI Act is not only transforming European regulation; it is also reshaping global AI policy discussions. Since the Act was proposed, other countries have taken related steps:

- United States: In October 2022, the White House Office of Science and Technology Policy published the Blueprint for an AI Bill of Rights, a non-binding but influential set of principles addressing data bias, privacy, and algorithmic discrimination.

- China: The Cyberspace Administration of China issued rules on algorithm transparency and data governance in 2023, including provisions for deep synthesis services (Cyberspace Administration of China, 2023).

- South Korea: As noted above, the AI Basic Act addresses accountability and risk while also emphasizing human dignity, safety, and innovation, making it one of Asia’s most forward-looking AI policies.

These regulatory shifts show that trustworthiness is now a competitive and collaborative priority in AI development across nations.

How Industry Is Responding

Major technology companies and smaller enterprises are responding to the rising demand for trustworthy AI by adapting their development and documentation practices:

- Google and Microsoft have adopted formal AI ethics charters, such as Google's AI Principles and Microsoft's Responsible AI Principles, whose emphasis on transparency, human review, and secure design echoes the EU AI Act.

- SMEs are increasingly turning to cloud-based compliance platforms to manage the documentation, version control, and risk logs required under new laws. These platforms reduce the compliance burden while still meeting core regulatory needs.

The Act as a Practical Standard for Trust

The EU AI Act has become more than a regulation: it is also a practical reference standard. It helps:

- Companies structure their compliance and gain market trust

- Governments align safety with innovation

- Researchers benchmark AI development against ethical and technical norms

So far in Chapter 1, we’ve explored the ethical, legal, and technical pillars of AI trustworthiness. The EU AI Act and its global impact show that trust can be systematically embedded into AI, not only through values but also through standards, oversight, and real-world enforcement.

But trust cannot be bolted on at the end. It must be integrated at every stage of the AI lifecycle, from initial design to long-term monitoring.

In the next section, we introduce the AI development lifecycle: a step-by-step structure that allows developers to embed ethical, legal, and technical trustworthiness from day one.

TRUST Talks

Student: "BillyBot, why does trustworthiness need to be applied at every stage of the AI lifecycle?"

BillyBot: "Because trust isn’t built in one go; it’s nurtured step by step. Each phase, from planning to deployment, plays a role in ensuring the AI is trustworthy."

Student: "Ah, like in the COMPAS case! If fairness had been considered in planning, those biases could’ve been avoided."

BillyBot: "Exactly. A strong foundation in planning prevents costly fixes and trust issues later."

Bibliography

- Cyberspace Administration of China. (2023). Provisions on the Administration of Deep Synthesis Internet Information Services. http://www.cac.gov.cn/

- Ministry of Science and ICT. (2026). AI Basic Act. https://www.msit.go.kr/eng/bbs/view.do?sCode=eng&mId=4&mPid=2&bbsSeqNo=42&nttSeqNo=1071&searchOpt=ALL