1.5.2. Case 2 Recruitment algorithms and ethical trustworthiness

Case 2: Bias and Ethical Failure in Amazon’s Hiring Algorithm

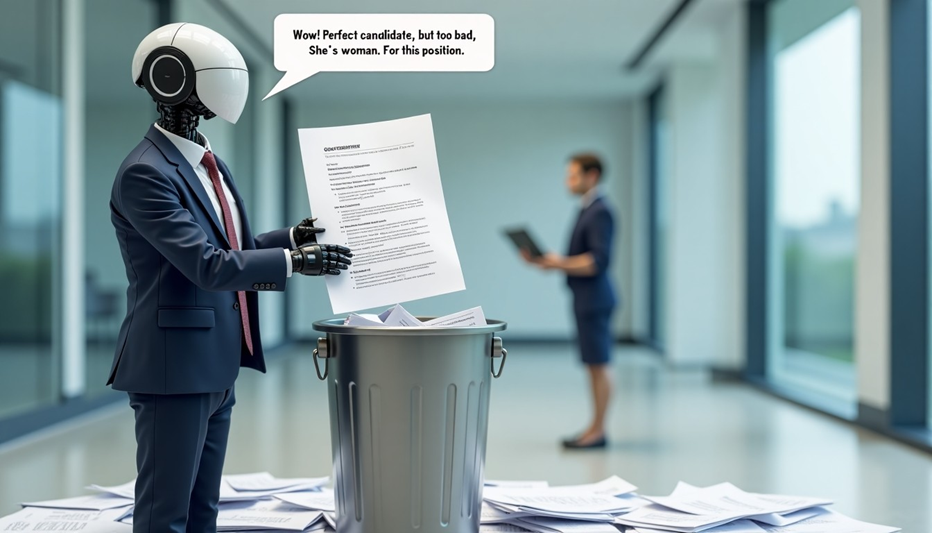

Amazon’s résumé-screening algorithm began to reflect a disturbing pattern: it penalized female applicants simply for being women. Between 2014 and 2017, the company experimented with AI to automate its hiring pipeline for technical roles. However, the model, trained on a decade of internal résumés, absorbed historical biases that favored male applicants. As a result, résumés that included terms such as “women’s chess club” or “women’s coding group” were downgraded. This real-world example illustrates how ethical trustworthiness can break down in AI systems, as shown in Figure 12.

Case Study 007: Amazon’s Resume Screening AI (2014–2017)

Location: United States | Theme: Bias in Automated Decision-Making

🧾 Overview

Between 2014 and 2017, Amazon developed an internal AI tool to evaluate and rank job applicants for technical roles based on résumé analysis. The model was trained using a dataset of résumés submitted over a 10-year period, primarily from previous successful hires at the company. The system aimed to reduce time spent on manual screening.

🚧 Challenges

The system downgraded résumés containing terms like “women’s,” reflecting gender bias learned from historical patterns. No pre-deployment fairness audit was performed.

💥 Impact

The tool potentially excluded qualified applicants based on gendered language. The issue raised concerns over bias in automated HR systems.

🛠️ Action

Amazon discontinued the tool internally. No external announcement, investigation, or reparative measures were taken.

🎯 Results

The case shaped AI ethics discussions in the United States and drew attention to the need for stricter data governance protocols.

How Biased Hiring Algorithms Undermine Trustworthiness

Amazon’s AI system didn’t deliberately discriminate, but it replicated patterns from biased historical data—assigning lower scores to resumes associated with women. An algorithm optimized for efficiency became a tool for reinforcing systemic inequality.
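The mechanism can be sketched with a deliberately tiny, hypothetical example. It is not Amazon’s model, whose internals were not published. Instead, it assumes a naive-Bayes-style scorer that weights each token by its smoothed log-odds of appearing in past hired versus rejected résumés. The function names `token_log_odds` and `score` and the toy history are illustrative inventions. In that history, “women’s” appears only in rejected résumés, so the learned weight for the token is negative. As a result, an otherwise identical résumé scores lower once it mentions the term, even though gender is never an explicit input.

```python
import math
from collections import Counter

def token_log_odds(records, alpha=1.0):
    """Hypothetical scorer: weight each token by the smoothed log-odds of it
    appearing in hired vs. rejected résumés (naive-Bayes style)."""
    hired_counts, rejected_counts = Counter(), Counter()
    n_hired = n_rejected = 0
    for tokens, hired in records:
        unique = set(tokens)  # count each token at most once per résumé
        if hired:
            hired_counts.update(unique)
            n_hired += 1
        else:
            rejected_counts.update(unique)
            n_rejected += 1
    weights = {}
    for tok in set(hired_counts) | set(rejected_counts):
        # Laplace smoothing (alpha) avoids log(0) for tokens seen in only one class
        p_hired = (hired_counts[tok] + alpha) / (n_hired + 2 * alpha)
        p_rejected = (rejected_counts[tok] + alpha) / (n_rejected + 2 * alpha)
        weights[tok] = math.log(p_hired / p_rejected)
    return weights

def score(tokens, weights):
    """Sum the learned weights of a résumé's tokens; unseen tokens count as 0."""
    return sum(weights.get(tok, 0.0) for tok in set(tokens))

# Hypothetical toy history (NOT Amazon data): past hires skew male, and
# "women's" appears only in rejected résumés.
history = [
    (["python", "chess_club"], True),
    (["python", "java"], True),
    (["java", "chess_club"], True),
    (["python", "women's", "chess_club"], False),
    (["java", "women's"], False),
    (["python"], False),
]

weights = token_log_odds(history)
base = score(["python", "chess_club"], weights)
with_term = score(["python", "chess_club", "women's"], weights)
print(round(weights["women's"], 3), with_term < base)  # -1.099 True
```

The model is never told anyone’s gender. The penalty emerges purely from which words co-occurred with past hiring decisions, and that is the pattern the paragraph above describes.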

This case highlights the ethical failure of deploying AI systems without proper fairness checks. Such bias can reduce workplace diversity, deepen structural discrimination, and ultimately erode trust in both the company and the technology.

Approaches to Promoting Diversity and Equity

Following this incident, Amazon ceased using the tool and initiated internal reviews to improve data and design practices. Remediation strategies included:

- Removing sensitive demographic markers (e.g., gender, race) from training data

- Expanding datasets to reflect greater diversity

- Involving external stakeholders and ethics experts in review processes
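The first remediation step, removing explicit demographic markers, is often called “fairness through unawareness.” On its own it does not guarantee fairness, because terms such as “women’s” can act as proxies for the removed attribute. The sketch below assumes hypothetical record fields (`gender`, `tokens`) and hypothetical helper names. It first strips the explicit field from the training rows. It then uses the attribute, retained separately for auditing only, to flag tokens that occur almost exclusively within one group.

```python
from collections import Counter

# Hypothetical field names for explicit demographic markers
SENSITIVE_FIELDS = {"gender", "race"}

def strip_sensitive(record):
    """Fairness through unawareness: drop explicit demographic fields."""
    return {k: v for k, v in record.items() if k not in SENSITIVE_FIELDS}

def find_proxy_tokens(records, attr, group, threshold=0.9, min_count=2):
    """Flag tokens seen at least `min_count` times whose occurrences fall
    (almost) entirely within one group, i.e. tokens a model could use to
    reconstruct the removed attribute."""
    total, in_group = Counter(), Counter()
    for rec in records:
        for tok in set(rec["tokens"]):
            total[tok] += 1
            if rec[attr] == group:
                in_group[tok] += 1
    return sorted(
        tok for tok, n in total.items()
        if n >= min_count and in_group[tok] / n >= threshold
    )

# Hypothetical applicant records; the attribute is kept aside for auditing only.
applicants = [
    {"gender": "F", "tokens": ["python", "women's", "chess_club"]},
    {"gender": "F", "tokens": ["java", "women's"]},
    {"gender": "M", "tokens": ["python", "chess_club"]},
    {"gender": "M", "tokens": ["java", "python"]},
]

training_rows = [strip_sensitive(r) for r in applicants]  # no "gender" key left
proxies = find_proxy_tokens(applicants, "gender", "F")
print(proxies)  # ["women's"]
```

Even after the explicit field is gone, “women’s” still identifies the group perfectly in this toy data. This is why the list pairs marker removal with more diverse data and external review rather than treating it as sufficient by itself.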

Beyond internal reform, new regulatory efforts emerged. For example, New York City’s Local Law 144, passed in 2021 and enforced from July 2023, requires bias audits of AI-driven hiring systems.[2] It was one of the first city-level attempts to embed ethical trustworthiness into algorithmic design by law, not just by intention.
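The core calculation in such a bias audit can be sketched in a few lines. This sketch assumes the impact-ratio definition used in the rules implementing New York City’s audit requirement. Under that definition, each category’s selection rate (selected ÷ applicants) is divided by the selection rate of the most-selected category. The counts are hypothetical, and the function names are illustrative. The 0.8 cut-off is the U.S. EEOC “four-fifths” rule of thumb, included here only as an illustrative assumption. It is not presented as a pass/fail threshold set by the New York law.

```python
def impact_ratios(outcomes):
    """outcomes maps category -> (number selected, number of applicants).
    Selection rate = selected / applicants; impact ratio = a category's
    selection rate divided by the highest selection rate of any category."""
    rates = {cat: sel / total for cat, (sel, total) in outcomes.items() if total > 0}
    best = max(rates.values())
    if best == 0:
        raise ValueError("no category has any selections")
    return {cat: rate / best for cat, rate in rates.items()}

def below_threshold(ratios, threshold=0.8):
    """Categories under the four-fifths rule of thumb (an assumption here)."""
    return sorted(cat for cat, r in ratios.items() if r < threshold)

# Hypothetical screening counts, for illustration only
outcomes = {"male": (60, 200), "female": (18, 100)}
ratios = impact_ratios(outcomes)
print({cat: round(r, 2) for cat, r in ratios.items()})  # {'male': 1.0, 'female': 0.6}
print(below_threshold(ratios))  # ['female']
```

Here women are selected at 18% against 30% for men, an impact ratio of 0.6. That is the kind of disparity a pre-deployment audit would surface before a tool reaches real applicants.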

Lessons for Ethical AI Design

Hiring algorithms offer efficiency gains, but without fairness safeguards they risk deepening inequality. Ethical trustworthiness is not a technical add-on; it is central to the public legitimacy of AI systems. Fair hiring practices, explainable models, and accountability mechanisms are not merely “nice to have”; they are essential for trust.

Bibliography

1. Dastin, J. (2018, October 10). Amazon scraps secret AI recruiting tool that showed bias against women. Reuters. https://www.reuters.com/article/us-amazon-com-jobs-automation-insight-idUSKCN1MK08G

2. New York City Council. (2021). Local Law 144: Automated Employment Decision Tools Audit Law. https://www.nyc.gov/assets/dca/downloads/pdf/about/Local-Law-144-Automated-Employment-Decision-Tools.pdf