1.5.3. Case Study 3: Healthcare AI and Stable Trustworthiness


Case 3: Healthcare AI Failure and the Cost of Instability

“The AI told her it was okay to go home, but she didn’t get home.”

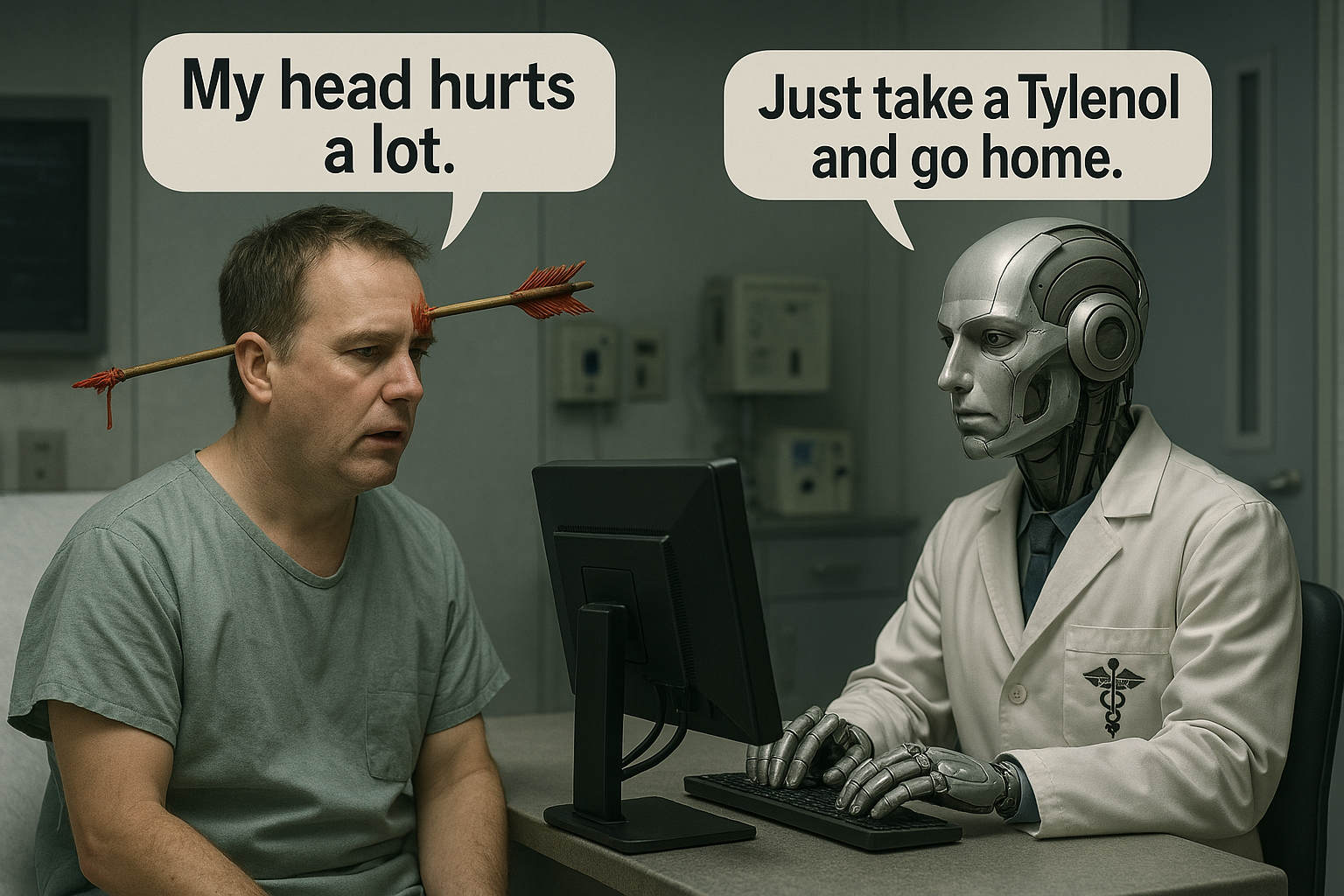

This heartbreaking statement refers to a 2020 case in the UK in which a hospital-deployed AI system missed early signs of breast cancer. The patient trusted the AI's diagnosis and did not pursue further testing, ultimately missing a critical treatment window. As illustrated in Figure 13, this failure shows the devastating impact of poor system stability and insufficient human oversight in medical AI.

Why Stability in Healthcare AI Is Non-Negotiable

The misdiagnosis occurred because the AI system had been trained on non-diverse data, drawn mostly from white women. As a result, it failed to recognize breast cancer symptoms in patients from minority groups. This highlights a broader risk: AI systems trained on unrepresentative data often perform poorly for underrepresented groups.

Stability in healthcare does not mean strong performance under ideal conditions; it means reliable, safe performance across real-world, diverse populations. A lack of this kind of stability does not just reduce accuracy: it puts lives at risk.
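
Instability across populations of this kind is usually exposed by evaluating a model per demographic subgroup instead of only in aggregate. The Python sketch below assumes binary labels (1 = cancer present, or flagged by the model) and one group label per patient, and computes sensitivity (recall) per subgroup plus the gap between the best- and worst-served groups. The function names and toy data are hypothetical and are not taken from the case.

```python
from collections import defaultdict


def sensitivity_by_group(y_true, y_pred, groups):
    """Sensitivity (recall) per subgroup: true positives / all actual positives."""
    true_pos = defaultdict(int)
    actual_pos = defaultdict(int)
    for truth, pred, group in zip(y_true, y_pred, groups):
        if truth == 1:
            actual_pos[group] += 1
            if pred == 1:
                true_pos[group] += 1
    return {g: true_pos[g] / actual_pos[g] for g in actual_pos}


def worst_group_gap(per_group):
    """Difference between the best- and worst-served subgroup."""
    return max(per_group.values()) - min(per_group.values())


# Hypothetical toy data: 1 = cancer present (y_true) or flagged by the model (y_pred).
y_true = [1, 1, 1, 1, 0, 1, 1, 1, 1, 0]
y_pred = [1, 1, 1, 1, 0, 1, 0, 0, 1, 0]
groups = ["A"] * 5 + ["B"] * 5

overall = sensitivity_by_group(y_true, y_pred, ["all"] * len(y_true))
per_group = sensitivity_by_group(y_true, y_pred, groups)
print(overall)                     # {'all': 0.75}
print(per_group)                   # {'A': 1.0, 'B': 0.5}
print(worst_group_gap(per_group))  # 0.5
```

On this toy data the aggregate sensitivity of 0.75 hides the fact that group B's cancers are caught only half the time. Reporting per-group figures and the worst-group gap makes that kind of failure visible before deployment.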

How the Sector Is Responding

To improve safety and fairness, healthcare AI developers and institutions are implementing several technical and procedural solutions:

- Representative data training: Google Health’s breast cancer model now integrates multinational datasets to reduce demographic bias and increase clinical relevance.

- Mandatory human oversight: In Japan, AI-generated diagnostic results are always verified by human doctors. This double-check system adds a vital layer of protection and reinforces accountability.

- Stress testing for robustness: U.S. research institutes simulate unpredictable inputs to evaluate how AI systems handle atypical cases, such as noise, missing data, or rare symptoms.
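
Stress testing of this kind can be sketched as measuring how often a model's prediction changes when the same case is perturbed with noise or missing values. In this minimal Python sketch, `toy_model`, the perturbation functions, the noise level, and the missing-data probability are all hypothetical placeholders, not any institute's actual protocol.

```python
import random
from typing import Callable, List, Optional

# A feature vector where None marks a missing measurement.
Features = List[Optional[float]]


def toy_model(x: Features) -> int:
    """Hypothetical stand-in classifier: returns 1 (flag) when the mean of the
    available features exceeds 0.5. Missing values are skipped; an input with
    every value missing is flagged (1) so it would go to a human."""
    present = [v for v in x if v is not None]
    if not present:
        return 1
    return int(sum(present) / len(present) > 0.5)


def add_noise(x: Features, sigma: float, rng: random.Random) -> Features:
    """Add Gaussian noise to every present value (simulates scan or sensor noise)."""
    return [v + rng.gauss(0.0, sigma) if v is not None else None for v in x]


def drop_values(x: Features, p: float, rng: random.Random) -> Features:
    """Blank out each value with probability p (simulates missing data)."""
    return [None if rng.random() < p else v for v in x]


def flip_rate(model: Callable[[Features], int],
              inputs: List[Features],
              perturb: Callable[[Features], Features],
              trials: int = 20) -> float:
    """Fraction of perturbed copies whose prediction differs from the clean one."""
    flips = total = 0
    for x in inputs:
        baseline = model(x)
        for _ in range(trials):
            if model(perturb(x)) != baseline:
                flips += 1
            total += 1
    return flips / total


rng = random.Random(0)  # fixed seed so the run is repeatable
cases = [[0.2, 0.3, 0.1], [0.9, 0.8, 0.7], [0.5, 0.55, 0.45]]  # last case sits near the boundary
noise_rate = flip_rate(toy_model, cases, lambda x: add_noise(x, 0.1, rng))
missing_rate = flip_rate(toy_model, cases, lambda x: drop_values(x, 0.3, rng))
print(f"flip rate under noise:        {noise_rate:.2f}")
print(f"flip rate under missing data: {missing_rate:.2f}")
```

The exact rates depend on the seed, so none are quoted here. What matters is the pattern: clear-cut cases should almost never flip, so a high flip rate on clinically unambiguous inputs, rather than on borderline ones, is the warning sign.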

Together, these efforts show that stability requires more than algorithmic performance. It demands ongoing validation, domain-specific resilience, and regulatory alignment.

When AI supports medicine, every decision must be safe, fair, and explainable. Without systemic safeguards, AI can become a silent risk rather than a helpful ally.
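
The mandatory human verification described for Japan can be enforced in software as well as in procedure: an AI result is treated as a draft that cannot reach the patient until a clinician signs off. A minimal Python sketch; the `DraftFinding` schema, `release_to_patient`, and all field values are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class DraftFinding:
    """An AI-generated result that stays a draft until a clinician signs off (hypothetical schema)."""
    patient_id: str
    ai_label: str
    ai_confidence: float
    reviewer: Optional[str] = None
    final_label: Optional[str] = None

    def sign_off(self, reviewer: str, final_label: str) -> None:
        # The clinician may confirm or override the AI label; both are kept for accountability.
        self.reviewer = reviewer
        self.final_label = final_label

    @property
    def overridden(self) -> bool:
        return self.final_label is not None and self.final_label != self.ai_label


def release_to_patient(finding: DraftFinding) -> str:
    """Refuse to release any result without a human sign-off, however confident the AI is."""
    if finding.reviewer is None or finding.final_label is None:
        raise PermissionError(f"{finding.patient_id}: clinician sign-off required before release")
    return finding.final_label


finding = DraftFinding("p-001", ai_label="no abnormality", ai_confidence=0.97)
try:
    release_to_patient(finding)
except PermissionError as err:
    print(err)  # p-001: clinician sign-off required before release

finding.sign_off(reviewer="reviewer-01", final_label="refer for further imaging")
print(release_to_patient(finding), finding.overridden)  # refer for further imaging True
```

The gate deliberately ignores `ai_confidence`: in a case like the one above, a confident "all clear" is exactly the output that most needs a second look. Keeping both the AI label and the clinician's final label also leaves an audit trail for accountability.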

AI Stability and Fairness Reflection

Scenario: A banking institution deploys an AI-powered loan approval system to improve efficiency. After launch, customers report unexplained rejections. A regulatory audit reveals the model disproportionately denies loans to certain demographic groups, raising allegations of bias.
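
A regulatory audit like the one in this scenario would typically begin by comparing approval rates across demographic groups. This minimal Python sketch uses hypothetical toy decisions to compute per-group approval rates and their ratio (lowest over highest). The 0.8 comparison borrows the "four-fifths rule" from US employment-selection guidelines, a common screening heuristic rather than a definitive legal test for lending. The sketch quantifies the disparity but does not identify its root causes, which is what the task asks you to do.

```python
from collections import Counter


def approval_rates(decisions, groups):
    """Share of applications approved (decision == 1) within each group."""
    approved, total = Counter(), Counter()
    for decision, group in zip(decisions, groups):
        total[group] += 1
        approved[group] += decision
    return {g: approved[g] / total[g] for g in total}


def disparate_impact_ratio(rates):
    """Lowest group approval rate divided by the highest; 1.0 means parity."""
    return min(rates.values()) / max(rates.values())


# Hypothetical toy decisions (1 = approved, 0 = denied) for two applicant groups.
decisions = [1, 1, 1, 0, 1, 1, 0, 0, 0, 1]
groups = ["X"] * 5 + ["Y"] * 5

rates = approval_rates(decisions, groups)
ratio = disparate_impact_ratio(rates)
print(rates)               # {'X': 0.8, 'Y': 0.4}
print(ratio, ratio < 0.8)  # 0.5 True
```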

Task:

1. Identify three root causes of the trust failure in this AI system.

2. Propose two technical or procedural solutions to improve trustworthiness and performance in future decisions.

Instructions:

- Base your response on the principles of trustworthy AI (ethical, legal, and stable).

- Use bullet points to clearly present your findings.