3.1.1. Systemic Risks - Is Failure Predictable in AI?

“What if the greatest risk in AI isn’t what we see, but what we never thought to test?”

In the last section, we explored how high-performing models can still fail catastrophically. These failures are not always caused by coding errors or engineering oversight; they often emerge from something deeper: a systemic blind spot in how we understand risk.

When AI performs well in tests but fails in deployment, it reveals more than a performance gap: it exposes a systemic risk gap.

Why AI Risk Is Structural, Not Sporadic¶

Unlike traditional software, AI systems are trained, not fully programmed. That means their behavior depends on training data, deployment context, and design assumptions, many of which are invisible or incomplete. When a failure occurs, it tends to recur wherever the same data and assumptions apply, not just once.

Systemic risks are embedded in model architecture, data pipelines, and deployment decisions. They are not accidents; they are design oversights.

- Systemic risk: a risk that arises from the overall structure or operation of a system, where a failure in one part can cascade and cause widespread impact [1].

Examples include:

- Training on datasets that exclude or misrepresent key groups

- Optimizing for average accuracy instead of edge-case resilience

- Relying on test sets that don’t reflect deployment environments

- Skipping fairness audits

These risks aren’t bugs in the code; they’re symptoms of how we build AI without anticipating where failure matters most.
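
The second example in the list above, optimizing for average accuracy, can be made concrete with a small sketch. The evaluation records below are invented purely for illustration (the groups "A" and "B" and all counts are assumptions, not data from any real system); the point is only that a single aggregate number can hide a subgroup on which the model mostly fails.

```python
from collections import defaultdict

# Made-up evaluation records: (group, true_label, predicted_label).
# Group "A" dominates the test set; group "B" is under-represented.
records = (
    [("A", 1, 1)] * 90      # 90 correct predictions for group A
    + [("A", 1, 0)] * 2     # 2 errors for group A
    + [("B", 1, 1)] * 3     # 3 correct predictions for group B
    + [("B", 1, 0)] * 5     # 5 errors for group B
)


def accuracy_by_group(rows):
    """Return (overall accuracy, {group: accuracy}) for the given records."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for group, y_true, y_pred in rows:
        total[group] += 1
        correct[group] += int(y_true == y_pred)
    overall = sum(correct.values()) / sum(total.values())
    per_group = {g: correct[g] / total[g] for g in total}
    return overall, per_group


overall, per_group = accuracy_by_group(records)
print(f"overall: {overall:.2f}")
for group, acc in sorted(per_group.items()):
    print(f"group {group}: {acc:.2f}")
```

With these invented numbers the overall accuracy is 0.93, while group B sits below 0.40. A benchmark that reports only the first figure would not surface the second.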

To shift from reactive fixes to proactive prevention, we need governance models that turn abstract risk into operational control. The GDPR requires that personal data be processed fairly and transparently, including where algorithmic processing affects individual rights, while ISO/IEC 23894 offers guidance for addressing these concerns at the system-design level.

That’s where ISO 31000 comes in. Originally developed for enterprise and safety-critical systems, it offers a universal scaffold for risk-based thinking, one that is increasingly being extended to AI, especially when paired with AI-specific guidance from ISO/IEC 23894.

Note

GDPR Article 5(1)(a) sets out the principle of lawfulness, fairness and transparency, requiring that personal data be “processed lawfully, fairly and in a transparent manner.” In AI systems, this applies to training datasets, audit procedures, and model outputs whenever they involve personal data, including demographic attributes of identifiable individuals.

ISO 31000: Risk as a Design Discipline¶

Traditional safety engineering relies on deterministic systems. But AI systems are dynamic, data-driven, and probabilistic. To manage this new category of risk, we need a framework that’s just as adaptive, and that’s where ISO 31000 proves invaluable.

ISO 31000 offers a more dynamic lens: risk is not just something to detect; it must be designed for.

While ISO 31000 provides the backbone for generic risk management, ISO/IEC 23894 adapts those principles to the specific risks of machine learning systems, such as data distribution shift, model opacity, and feedback loops. Together, they form a two-layer governance structure: ISO 31000 guides the overall process, while ISO/IEC 23894 ensures the risks unique to AI are not overlooked.

- Model opacity: the degree to which the internal workings or decisions of a model are not interpretable. (Source: IEEE P7001 - Transparency Standard)

Key Principles ISO 31000 Brings to AI Safety¶

Table 9: ISO 31000 Principles Applied to AI Safety

| Principle | Application to AI |
|---|---|
| Systemic Risk Thinking | Encourages broad analysis of data, users, and context, not just code and model performance |
| Lifecycle Risk Integration | Requires risk tracking from model design through deployment and post-market feedback |
| Stakeholder-Centered Risk | Considers who is harmed, how harm is distributed, and fairness of outcomes |
| Risk Appetite & Thresholds | Helps define tolerable risk levels, especially in edge-case or bias-prone scenarios |

This shifts the question from “Did the model pass the benchmark?” to “Are we managing the full spectrum of risk, now and later?”

Applying the First Two Risk Phases¶

Here’s how the first two phases of ISO 31000 support responsible AI development:

Table 10: ISO 31000 Risk Management Phases in AI

| Phase | Key Activities | AI Context |
|---|---|---|
| 1. Establish Context | Define organizational goals, legal environment, and stakeholder needs | Is the model used in high-stakes settings like healthcare or hiring? Who is affected? |
| 2. Identify Risks | Surface failure modes systematically | Will the AI reinforce systemic bias? Is the data misaligned with real-world diversity? |

These two steps alone often expose risks that benchmarks miss, not because the model is wrong, but because the benchmark lacks the deployment context.
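
As a rough sketch of how the two phases might be written down in code, the example below records a deployment context (phase 1) and then checks one of the questions from the table, whether the training data is misaligned with the people the system will actually serve (phase 2). Everything here is an assumption made for illustration: the class names `Context` and `IdentifiedRisk`, the function `identify_representation_risks`, the `min_ratio` threshold of 0.5, and the group shares are all hypothetical and are not prescribed by ISO 31000 or ISO/IEC 23894.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Context:
    """Phase 1: where the model is used and who is affected (hypothetical schema)."""
    use_case: str
    high_stakes: bool
    affected_groups: dict[str, float]  # expected share of each group in deployment


@dataclass
class IdentifiedRisk:
    """Phase 2: one surfaced failure mode."""
    group: str
    description: str


def identify_representation_risks(context, training_shares, min_ratio=0.5):
    """Flag groups whose training share falls below min_ratio times their
    expected deployment share. min_ratio is an arbitrary illustrative cutoff."""
    risks = []
    for group, deployed_share in context.affected_groups.items():
        train_share = training_shares.get(group, 0.0)
        if train_share < min_ratio * deployed_share:
            risks.append(IdentifiedRisk(
                group=group,
                description=(
                    f"{group}: {train_share:.0%} of training data vs "
                    f"{deployed_share:.0%} expected in deployment"
                ),
            ))
    return risks


# Invented numbers for a hypothetical hiring model.
context = Context(
    use_case="resume screening",
    high_stakes=True,
    affected_groups={"group_x": 0.6, "group_y": 0.3, "group_z": 0.1},
)
training_shares = {"group_x": 0.80, "group_y": 0.19, "group_z": 0.01}

risks = identify_representation_risks(context, training_shares)
for risk in risks:
    print(risk.description)
```

A check like this is only one of many possible identification steps, but it shows how a question from the context phase can become something a team runs before any benchmark is consulted.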

“AI doesn’t just fail from technical errors. It fails from unexamined assumptions.”

ISO 31000 turns this insight into methodology: it brings structured foresight to a field often dominated by hindsight.

- Structured foresight: a formal process of anticipating future risks and uncertainties through scenario analysis or other predictive methods. (Source: OECD Foresight Manual, 2010)

Why Structured Risk Quantification Matters¶

One of the most common failure points in AI deployment is risk invisibility, where known or unknown risks aren’t prioritized because they haven’t been quantified. That’s why ISO 31000 emphasizes structured risk scoring. It helps teams move from intuition to evidence, and from awareness to action.

How the Risk Matrix Works¶

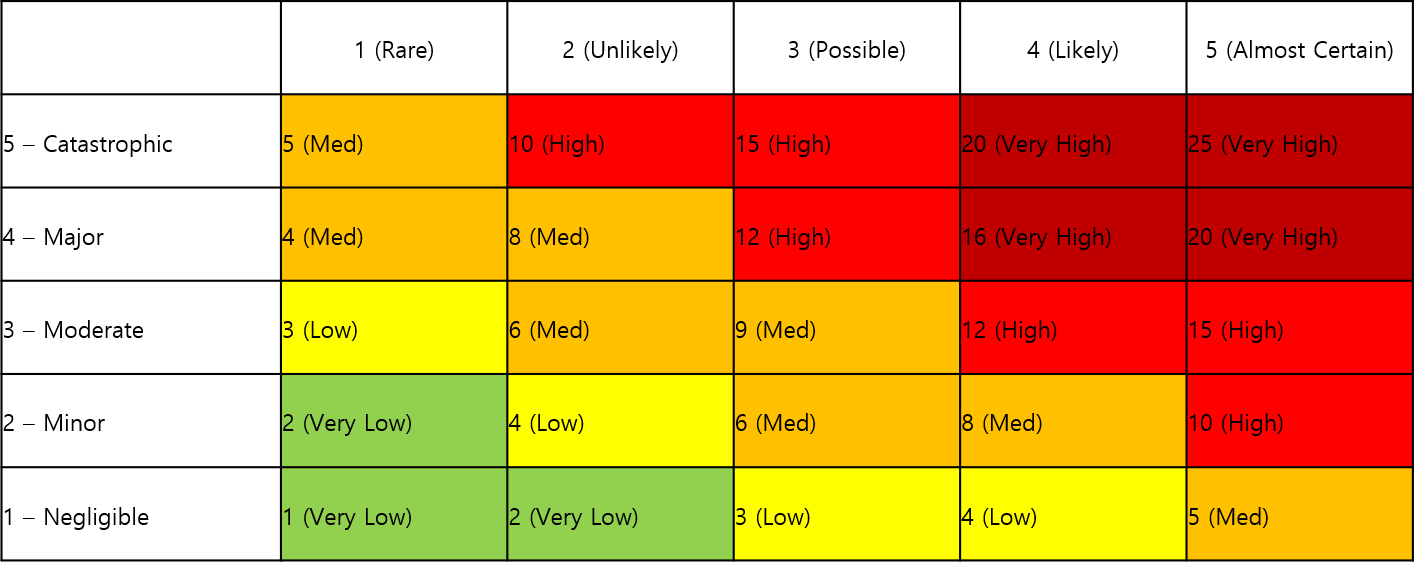

To prioritize risks, teams applying ISO 31000 commonly use a powerful tool: the risk matrix (a technique described in the companion standard IEC 31010). It scores issues along two dimensions:

Table 11: Risk Matrix Dimensions and Categories

| Component | Description |
|---|---|
| Likelihood (L) | How likely is the failure in real-world use? Scored from 1 (rare) to 5 (almost certain) |
| Impact (I) | How severe are the consequences? Scored from 1 (negligible) to 5 (catastrophic) |

The product of these scores (L × I) categorizes the risk:

Table 12: Risk Level Interpretation

| Score Range | Risk Level |
|---|---|
| 1–3 | Very Low |
| 4–6 | Low |
| 7–12 | Medium |
| 13–19 | High |
| 20–25 | Very High |
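
The scoring rule in Tables 11 and 12 is simple enough to express directly. The following is a minimal sketch; the function name `risk_level` and the choice to reject out-of-range inputs with a `ValueError` are assumptions for illustration, while the band boundaries are taken from Table 12.

```python
def risk_level(likelihood: int, impact: int) -> tuple[int, str]:
    """Return (score, level) where score = likelihood * impact.

    Both inputs are expected on the 1-5 scales from Table 11.
    """
    for name, value in (("likelihood", likelihood), ("impact", impact)):
        if not 1 <= value <= 5:
            raise ValueError(f"{name} must be between 1 and 5, got {value}")

    score = likelihood * impact

    # Band boundaries follow Table 12.
    if score <= 3:
        level = "Very Low"
    elif score <= 6:
        level = "Low"
    elif score <= 12:
        level = "Medium"
    elif score <= 19:
        level = "High"
    else:
        level = "Very High"
    return score, level


# A failure judged likely (4) with catastrophic consequences (5).
print(risk_level(4, 5))
# A rare failure (1) with moderate consequences (3).
print(risk_level(1, 3))
```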

These scores populate a risk register, helping teams decide which risks demand immediate mitigation, additional testing, or redesign before deployment.

- Risk register: a documented record of identified risks, including their characteristics, assessment, and actions taken. (Source: ISO 31000:2018)
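
A risk register can be as simple as a list of records sorted by score. The sketch below is one possible shape, not a format defined by ISO 31000: the `RiskEntry` fields, the risk IDs, the descriptions, and the likelihood and impact values are all hypothetical, chosen only to echo the examples earlier in this section.

```python
from bisect import bisect_left
from dataclasses import dataclass

LEVELS = ["Very Low", "Low", "Medium", "High", "Very High"]
UPPER_BOUNDS = [3, 6, 12, 19]  # top of each band except the last (scores 20-25)


@dataclass
class RiskEntry:
    risk_id: str
    description: str
    likelihood: int  # 1-5
    impact: int      # 1-5
    action: str

    @property
    def score(self) -> int:
        return self.likelihood * self.impact

    @property
    def level(self) -> str:
        # bisect_left finds the first band whose upper bound is >= score.
        return LEVELS[bisect_left(UPPER_BOUNDS, self.score)]


# Invented entries, deliberately listed out of priority order.
register = [
    RiskEntry("R3", "No fairness audit before release", 2, 4, "additional testing"),
    RiskEntry("R1", "Training data under-represents a key group", 4, 5, "redesign before deployment"),
    RiskEntry("R4", "Low-confidence outputs on rare inputs", 2, 1, "accept and monitor"),
    RiskEntry("R2", "Test set does not reflect deployment", 3, 4, "additional testing"),
]

# Highest score first, so the most urgent risks lead the register.
prioritized = sorted(register, key=lambda entry: entry.score, reverse=True)
for entry in prioritized:
    print(f"{entry.risk_id}  {entry.score:>2}  {entry.level:<9}  {entry.action}")
```

Keeping the score as a derived property rather than a stored field means a revised likelihood or impact estimate automatically changes the entry's position the next time the register is sorted.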

The matrix in Figure 22 visualizes how different combinations of likelihood and impact produce different levels of risk. Each cell represents a possible score from multiplying the two dimensions (L × I), ranging from 1 (Rare × Negligible) to 25 (Almost Certain × Catastrophic).

This structured layout helps teams quickly identify which risks fall into “Very High” (requiring immediate action), “Medium” (monitor and possibly mitigate), or “Very Low” (acceptable under normal circumstances).
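
To see how the 25 cells distribute across the five levels, the grid can be generated rather than read off a picture. This is a small sketch using only the scales and bands given above; the layout (highest likelihood on the top row) and the helper name `level_for` are arbitrary choices made for illustration.

```python
from collections import Counter

# Bands copied from Table 12: (upper bound of score range, level name).
BANDS = [(3, "Very Low"), (6, "Low"), (12, "Medium"), (19, "High"), (25, "Very High")]


def level_for(score: int) -> str:
    """Return the first band whose upper bound is at least the score."""
    return next(name for upper, name in BANDS if score <= upper)


# grid[lik - 1][imp - 1] holds the score for likelihood lik and impact imp.
grid = [[lik * imp for imp in range(1, 6)] for lik in range(1, 6)]

# Print with the highest likelihood on the top row.
print("L\\I " + " ".join(f"{imp:>3}" for imp in range(1, 6)))
for lik in range(5, 0, -1):
    print(f"{lik:>3} " + " ".join(f"{score:>3}" for score in grid[lik - 1]))

attainable = sorted({score for row in grid for score in row})
cells_per_level = Counter(level_for(score) for row in grid for score in row)

print("attainable scores:", attainable)
for _, name in BANDS:
    print(f"{name}: {cells_per_level[name]} cells")
```

Because each score is a product of two integers from 1 to 5, only 14 distinct values can occur. The "High" band (13–19), for instance, is reached only by scores of 15 and 16, which is worth keeping in mind when a team debates where to draw its own thresholds.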

In the next section, we’ll apply this matrix to a real-world AI fragility case and show how it could have been flagged earlier through structured risk practices.

Bibliography¶

1. International Organization for Standardization. (2018). ISO 31000:2018 - Risk management - Guidelines. ISO.