4.1. When Flawed Data Shapes Intelligent Decisions

When Flawed Data Shapes Intelligent Decisions¶

Large language models continue to impress with their linguistic fluency and problem-solving power. They can summarize dense medical papers, generate source code, or mimic creative writing styles in seconds. Yet behind these feats lies an uncomfortable truth: the same model that dazzles with brilliance can also confidently output plagiarized material, fabricate harmful quotes, or surface private user conversations scraped from the web1.

These failures are not simply bugs in a complex system. They are symptoms of deeper failures in data governance, failures that begin with how data is collected, curated, and justified. We often lack visibility into what our models are trained on. And more worryingly, we lack understanding of whom that data represents, or excludes.

This is not merely a technical limitation. It is a profound ethical concern.

What does it mean to build intelligent systems when the foundation is untraceable?

What risks emerge when AI learns from data we never agreed to share?

Case in Focus: The New York Times vs. OpenAI¶

In December 2023, The New York Times filed a groundbreaking lawsuit against OpenAI and Microsoft2. The central allegation was that OpenAI had trained GPT models on millions of Times articles without permission. Investigators found that GPT-4 could reproduce paywalled content verbatim, even from vague prompts.

This case raised critical questions about modern AI development:

- Can a model’s output constitute copyright infringement?

- Who is responsible for tracing and auditing training data?

- What rights do individuals or institutions have over content scraped at scale?

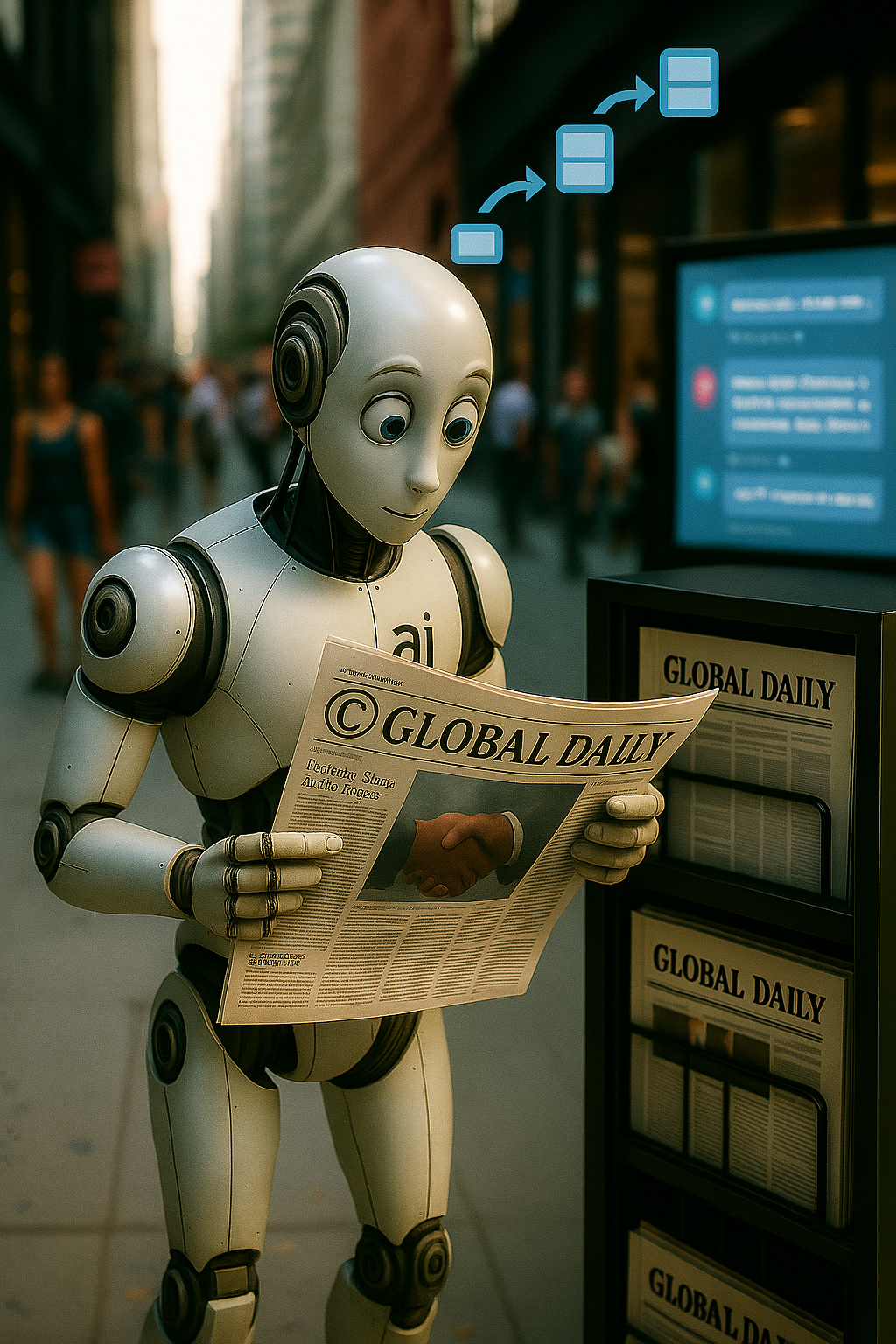

This AI-generated illustration symbolizes the ongoing legal and ethical dispute between The New York Times and OpenAI. The robot reading a copyrighted newspaper represents how AI systems may ingest proprietary journalistic content without explicit permission, raising concerns around copyright infringement, fair use boundaries, and the accountability of generative models trained on scraped web data.

When Flawed Data Enters, Harm Escalates¶

The classic phrase “garbage in, garbage out” no longer refers just to performance. Today, it also includes ethical and societal harm:

- Misinformation reinforced through linguistic patterns

- Stereotypes re-encoded and scaled

- Privacy violations affecting millions

- Untraceable or undocumented sampling leading to exclusion

These failures often lie hidden, until something breaks publicly. And by then, the damage may already be systemic.

The trustworthiness of an AI system begins at its foundation: the dataset.

Why This Matters¶

Most developers still rely on:

- Scraped data from public forums and websites

- Unverified or weakly labeled datasets

- Sampling strategies that ignore demographic diversity

These habits embed (1) bias, omission, and contamination directly into models. The result? AI systems that behave unpredictably or unfairly in critical applications, such as hiring, healthcare, or law enforcement.

- Systematic distortion in data or decisions that disadvantages one group compared to another.

A dataset that lacks consent, traceability, and representation cannot serve as a foundation for trustworthy AI.

In this section, we unpack what happens when flawed, unethical, or ungoverned data becomes the basis for intelligent decision-making.

Going forward, we examine why consent, ownership, and (1) traceability are not just legal safeguards, but essential components of data governance, and by extension, AI governance.

- The ability to track data from source to use, including transformations and lineage over time.

Data Governance vs. AI Governance

AI Governance is the broad framework for ensuring AI systems are transparent, fair, and accountable across their lifecycle.

Data Governance is a foundational pillar within that framework. It focuses on how data is:

- Collected (with consent)

- Structured (with metadata)

- Audited (for quality and fairness)

- Maintained (for traceability over time)

No AI governance system is complete without robust data governance.

When data governance fails, AI governance becomes reactive instead of preventive.

Bibliography¶

-

BBC News. (2023, March 22). ChatGPT bug exposed other users’ conversation titles. BBC. https://www.bbc.com/news/technology-65047304 ↩

-

CNN. (2023, December 27). New York Times files suit against OpenAI, Microsoft over AI training data. CNN. https://www.nytimes.com/2023/12/27/business/media/new-york-times-open-ai-microsoft-lawsuit.html ↩