5.1. Can You Trust a Model You Don’t Understand?


When AI systems take on decisions in aviation, healthcare, credit scoring, and beyond:

- Can we trust what we don’t understand?

- Can we rely on decisions we can’t explain?

- Can we prevent harm if we don’t know when or how to intervene?

In these domains, explainability isn’t optional; it’s the foundation of safety, accountability, and human oversight.

Without it, we don’t just risk error. We risk losing control entirely, and when that happens, the cost can be measured in lives.

Case Study 016: Boeing 737 MAX and the Failure of Hidden Automation (Location: Global | Theme: Safety and Explainability)

🧾 Overview

In 2018 and 2019, two Boeing 737 MAX aircraft crashed, killing 346 people. An automated system called MCAS forced the planes' noses down based on faulty sensor data. Before the first crash, pilots had not been told MCAS existed, and the system had no dedicated alert or override.

🚧 Challenges

MCAS relied on a single sensor, provided no warning when it activated, and offered no way for pilots to intervene in time.

💥 Impact

The crashes led to a global grounding of the 737 MAX for 20 months, a loss of trust in aviation safety, and billions of dollars in costs for Boeing.

🛠️ Action

Boeing redesigned MCAS to use two sensors and added pilot alerts. Regulators updated certification rules for automated systems.
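A second sensor makes a faulty reading detectable, and an alert tells the crew what the automation is doing. The sketch below shows this cross-check pattern in Python. It assumes a hypothetical `decide_trim` function and an invented disagreement limit. It illustrates the general idea of redundancy plus disclosure and is not Boeing's actual MCAS logic.

```python
from dataclasses import dataclass

# Hypothetical value for illustration only; NOT taken from Boeing's design
# or from any certification document.
DISAGREE_LIMIT_DEG = 5.0  # max allowed gap between the two AoA readings


@dataclass
class TrimDecision:
    activate: bool  # may the automated nose-down trim run?
    alert: str      # message shown to the crew ("" means nothing to report)


def decide_trim(aoa_left: float, aoa_right: float, activation_aoa: float) -> TrimDecision:
    """Act only when two independent sensors agree, and always say why."""
    gap = abs(aoa_left - aoa_right)
    if gap > DISAGREE_LIMIT_DEG:
        # One sensor may be faulty: stand down and tell the crew instead of
        # trusting a single reading.
        return TrimDecision(
            False,
            f"AOA DISAGREE ({aoa_left:.1f} vs {aoa_right:.1f} deg): automatic trim inhibited",
        )
    aoa = (aoa_left + aoa_right) / 2  # readings agree, so use their mean
    if aoa > activation_aoa:
        # Acting is allowed, but the action is announced rather than silent.
        return TrimDecision(
            True, f"AUTO TRIM NOSE DOWN: AoA {aoa:.1f} deg exceeds {activation_aoa:.1f} deg"
        )
    return TrimDecision(False, "")


# Example: one sensor reads 20 degrees higher than the other.
print(decide_trim(5.0, 25.0, activation_aoa=14.0))
```

With a single sensor, the 25-degree reading would have been acted on. With two, the disagreement is visible, the automation stands down, and the crew is told why.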

🎯 Results

The case showed the danger of hidden automation and the need for transparency, oversight, and human authority in safety-critical systems.

🧠 Case Study: Boeing 737 MAX and the Failure of Hidden Automation

In 2018 and 2019, two Boeing 737 MAX aircraft crashed (Lion Air Flight 610 and Ethiopian Airlines Flight 302), killing a total of 346 people. Investigations found that an automated system called MCAS (Maneuvering Characteristics Augmentation System) had repeatedly pushed the planes’ noses down in response to erroneous readings from a single angle-of-attack sensor.

Before the first crash, MCAS was not described in pilot manuals, so crews did not know it existed. There was no MCAS-specific alert, no dedicated override control, and no explanation of why the aircraft was behaving the way it was.

🗨️ "I don't know what the plane is doing."

- Final words from the cockpit recording, Ethiopian Airlines Flight 302

[Source: U.S. National Transportation Safety Board (NTSB), 2020 Final Report]

This wasn’t just a failure of flight control; it was a breakdown in system explainability.

What will happen when AI systems act without explanation?

As AI systems take on more decisions, from LLM-powered tools to recommendation engines, autonomous vehicles, and credit scoring models, we should ask:

- What happens when AI automation overrides us without giving a reason?

- What happens when we can’t see why it acts, or stop it in time?

When AI acts automatically, without offering reasons, we don’t just lose trust. We lose the ability to respond before something goes wrong.

The Design Lesson for AI Models

While MCAS was not a neural network or deep learning model, it followed the same behavioral pattern as today’s opaque AI systems:

- Decisions made automatically

- No explanations given

- Humans unable to detect, question, or override the system

The lesson here isn’t about airplanes. It’s about designing systems that give humans meaningful insight and authority, before and during high-stakes decisions.

This is why ISO/IEC 23894:2023, which provides guidance on AI risk management, treats explainability as a core risk control and advises developers to identify explainability gaps as part of AI lifecycle risk assessments[^1].

Likewise, ISO/IEC TR 24028:2020 places explainability alongside safety and robustness as foundational properties of trustworthy AI[^2]. Without it, there is no way to detect misuse, audit failures, or take corrective action in time.
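In software terms, an automated decision should carry its reasons, leave an audit record, and remain open to human override. The sketch below shows this in Python. It assumes a hypothetical `OverseenSystem` wrapper and a toy credit rule. Neither comes from ISO/IEC 23894 or ISO/IEC TR 24028, and the 0.45 debt-to-income cutoff is invented. Decisions without reasons are escalated rather than executed, and every action, including an override, is logged.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional


@dataclass
class Decision:
    action: str
    reasons: list[str]                  # human-readable justification
    overridden_by: Optional[str] = None


@dataclass
class OverseenSystem:
    """Hypothetical wrapper: explain, log, and allow human override."""
    decide: Callable[[dict], Decision]
    audit_log: list[dict] = field(default_factory=list)

    def run(self, inputs: dict) -> Decision:
        decision = self.decide(inputs)
        if not decision.reasons:
            # Never act silently: an unexplained decision goes to a human.
            decision = Decision("escalate_to_human", ["model gave no reasons"])
        self.audit_log.append(
            {"inputs": inputs, "action": decision.action, "reasons": decision.reasons}
        )
        return decision

    def override(self, decision: Decision, new_action: str, operator: str, why: str) -> Decision:
        # The human's choice replaces the automated one and is itself audited.
        replacement = Decision(new_action, [f"override: {why}"], overridden_by=operator)
        self.audit_log.append(
            {"replaced": decision.action, "action": new_action,
             "reasons": replacement.reasons, "operator": operator}
        )
        return replacement


def toy_credit_rule(applicant: dict) -> Decision:
    # Hypothetical rule; the 0.45 cutoff is an illustrative assumption.
    dti = applicant["debt_to_income"]
    if dti > 0.45:
        return Decision("decline", [f"debt-to-income {dti:.2f} exceeds 0.45"])
    return Decision("approve", [f"debt-to-income {dti:.2f} within 0.45 limit"])


system = OverseenSystem(toy_credit_rule)
first = system.run({"debt_to_income": 0.50})
final = system.override(first, "approve", operator="analyst_7", why="verified extra income")
print(first.reasons, final.overridden_by, len(system.audit_log))
```

The design choice is to refuse to execute any decision that arrives without reasons, rather than trying to explain it afterwards. The audit log records both the automated action and the human correction, so a later review can see who changed what and why.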

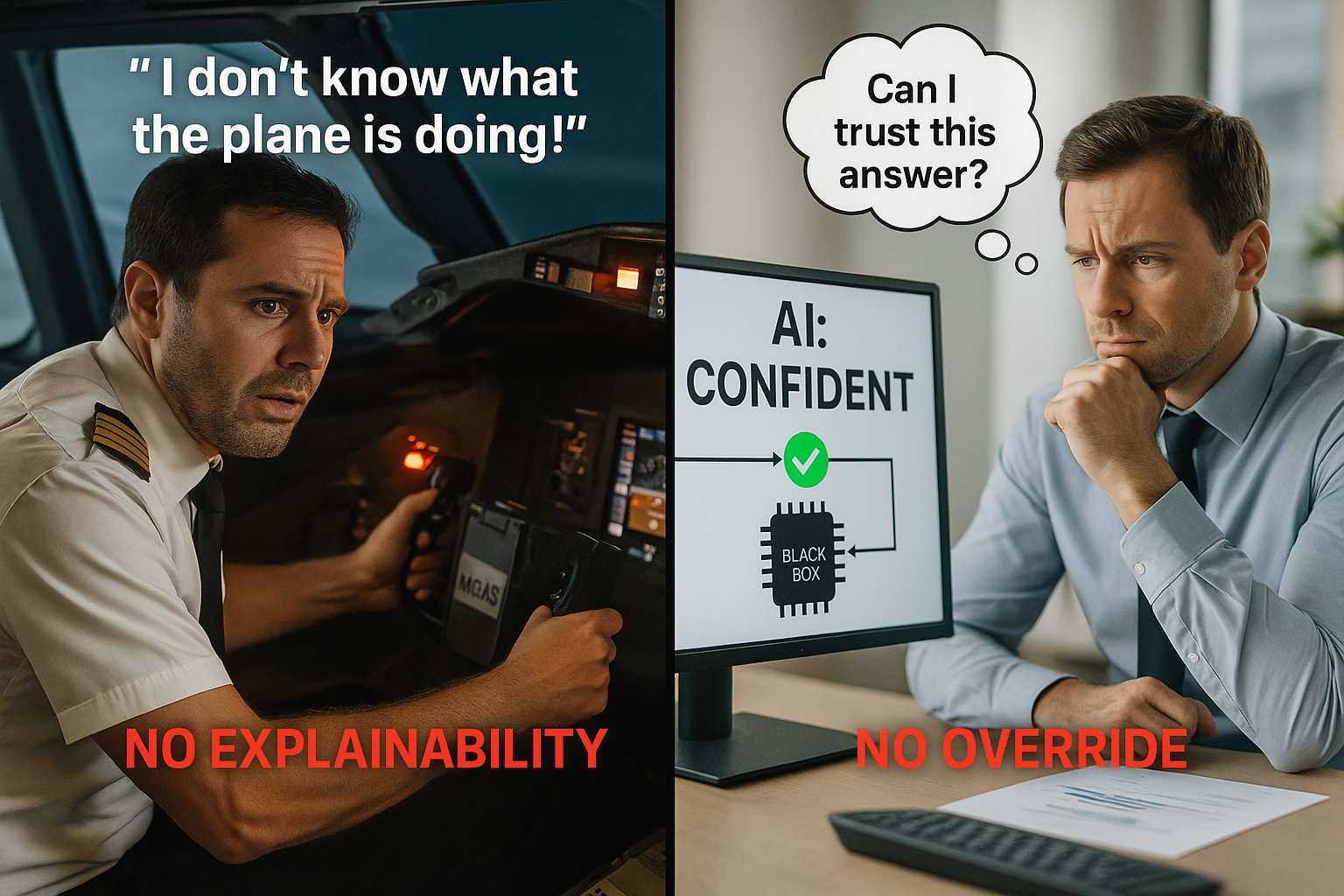

This AI-generated illustration compares two scenarios: a pilot unable to understand or override the MCAS system during the Boeing 737 MAX crashes, and a user questioning a confident but opaque AI decision. Both highlight the risks of hidden automation, lack of explainability, and absence of human control.

ThinkAnchor

“If you cannot explain it, you cannot control it.”

Cynthia Rudin, Professor of Computer Science, Duke University. Rudin argues that high-stakes decisions call for inherently interpretable models, because post-hoc explanations of black-box models often fail to reveal their true reasoning.

[Source: Rudin, C. (2019). Stop explaining black box machine learning models for high stakes decisions and use interpretable models instead. Nature Machine Intelligence, 1(5), 206–215.]

This pattern of silent, irreversible, and unexplainable decision-making is no longer unique to flight systems. Modern AI models, especially large-scale neural networks, often function as black boxes: they make predictions but offer little insight into how those predictions were formed.

Users see outputs, but not reasoning. Developers monitor performance, but cannot always trace causality.
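Rudin proposes an alternative to explaining a black box after the fact: use a model whose structure is the explanation. The sketch below shows that idea in Python. It assumes a hypothetical points-based credit scorecard, and the features, thresholds, and point values are invented for illustration. Every rule that moves the score is reported, so the output arrives with its reasoning instead of standing alone.

```python
# Hypothetical scorecard: features, thresholds, and points are invented
# for illustration and are not a validated credit model.
BASE_SCORE = 50
APPROVE_AT = 40
SCORECARD = [
    # (feature, threshold, points added when value >= threshold, description)
    ("late_payments_12m", 2, -30, "2+ late payments in the last 12 months"),
    ("years_employed", 3, +15, "employed 3+ years"),
    ("debt_to_income_pct", 45, -25, "debt-to-income of 45% or more"),
]


def score(applicant: dict) -> tuple[int, list[str]]:
    """Return the total score and every contribution that produced it."""
    total = BASE_SCORE
    reasons = [f"base score {BASE_SCORE}"]
    for feature, threshold, points, description in SCORECARD:
        if applicant[feature] >= threshold:
            total += points
            reasons.append(f"{points:+d} {description}")
    return total, reasons


def decide(applicant: dict) -> tuple[str, int, list[str]]:
    total, reasons = score(applicant)
    outcome = "approve" if total >= APPROVE_AT else "decline"
    return outcome, total, reasons


outcome, total, reasons = decide(
    {"late_payments_12m": 3, "years_employed": 5, "debt_to_income_pct": 30}
)
print(outcome, total)  # prints "decline 35"
for line in reasons:
    print("  ", line)
```

Here the applicant is declined with a score of 35. The printed list shows exactly which two rules moved the score from its base of 50, so a reviewer can check or contest each one.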

Delegation and oversight are not the same, but they are deeply intertwined. Delegation fails when we hand over decisions without safeguards; oversight fails when those safeguards exist only on paper. Together, these two failure modes show how control over AI systems can quietly erode.

The sections that follow explore how this problem unfolds through delegation (5.1.1) and failed oversight (5.1.2), what explainability really means for modern AI models, how to achieve it technically, and why delegating decisions without oversight is a choice we can no longer afford.