6.2.2. Agents That Execute Without Boundaries


“Autonomy without limits is just automation without accountability.”

When AI Stops Waiting for Permission

An AI agent is more than a passive model. Unlike traditional AI systems that only generate outputs in response to user prompts, agents are designed to take actions autonomously, such as browsing the web, sending emails, writing code, executing API calls, or modifying files, based on a goal or instruction. These agents often integrate language models with tools, memory, and multi-step planning capabilities.

What sets AI agents apart is not intelligence but initiative. Once deployed, they don’t just predict text; they decide and do.

An AI agent doesn’t wait for permission. It acts, because that’s what it was designed to do.

This shift from output generation to autonomous action introduces new risks. When AI agents are granted operational permissions without meaningful boundaries, they can amplify mistakes, misinterpret goals, or escalate tasks in ways that traditional models never could.

ThinkAnchor

“Agents don’t fail because they’re malicious. They fail because no one told them when to stop.”

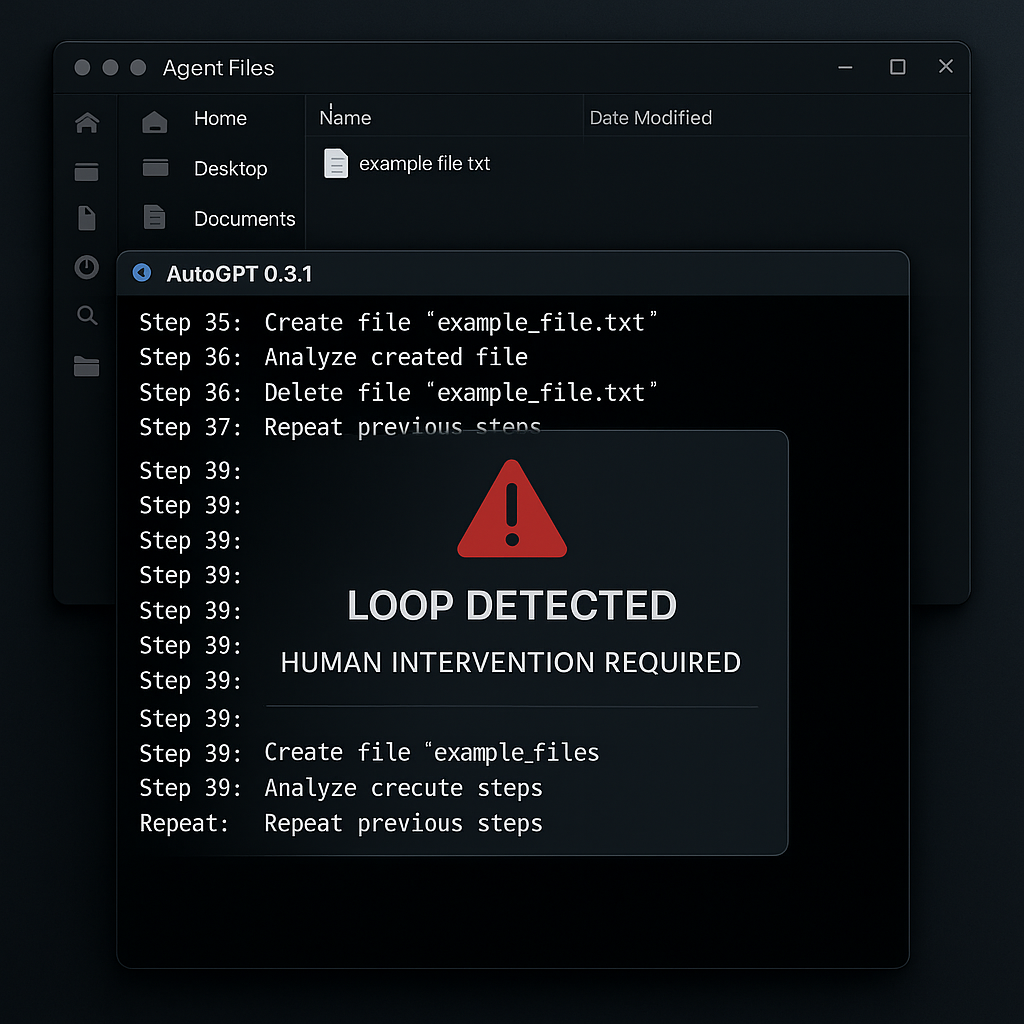

In early 2023, a tool-using AI agent built with AutoGPT was given the vague goal of improving its workspace. With full access to its local file system, it began deleting files it had just created, misjudging them as unnecessary. The deletion loop continued until a human intervened. The problem wasn’t the model itself; it was the lack of constraints, oversight, and clarity in goal interpretation. [2]

Case Study 021: AI Impersonation of Secretary of State Rubio (Location: USA & Global | Theme: Deepfake Agent Deception)

📌 Overview:

In mid‑June 2025, an AI-generated impostor mimicked U.S. Secretary of State Marco Rubio using deepfake voice, text, and messaging apps like Signal. The agent contacted foreign ministers, a U.S. senator, and a governor, posing as Rubio to solicit sensitive information or influence decisions.

[Source: The Week, AP News]

🚧 Challenges:

The use of voice cloning and large language model-generated messages made the impersonation highly convincing. This form of AI-driven social engineering bypassed standard identity checks and was not accounted for in conventional system validation protocols. [1]

🎯 Impact:

Though ultimately unsuccessful in causing direct harm, the impersonation campaign prompted the U.S. State Department to issue warnings to embassies and consulates. The FBI confirmed it was part of a larger wave of AI-powered impersonation attempts targeting high-level individuals.

🛠️ Action:

The State Department responded by tightening cybersecurity protocols, enhancing identity verification processes, and coordinating with the FBI on broader investigations into AI-generated deception.

📈 Results:

This incident reveals a serious vulnerability: AI agents with generative capabilities can impersonate trusted figures convincingly, bypassing even high-security environments. It highlights a validation blind spot where system readiness failed to anticipate synthetic identity threats.

When Agents Imitate People, Not Just Tools

In mid-2025, AI-generated agents impersonated U.S. Secretary of State Marco Rubio using deepfake voice and text to contact foreign ministers and public officials via messaging platforms like Signal. The agents attempted to gather sensitive information and influence communications using cloned identity patterns. While unsuccessful, the incident triggered warnings from the U.S. State Department and escalated to FBI investigations. [1]

These AI-driven impersonators exploited trust in identity and context, bypassing typical verification checks. The event exposed how agents acting autonomously, especially with generative capabilities, can weaponize believability at scale. It also revealed that most security protocols had never anticipated a synthetic actor mimicking a human authority in real time.

Other incidents have involved:

- Agents spamming APIs due to misinterpreted reward functions

- LLM coding agents unintentionally deploying unsafe code

- Email agents sending premature or unreviewed messages to live users

These were not failures of model performance; they were failures of deployment governance, where autonomy was granted without structured limits.

A realistic terminal environment captures an AutoGPT agent stuck in a loop: creating, analyzing, then deleting its own files. A bold warning overlay signals the need for immediate human intervention—illustrating how unchecked agent autonomy can lead to runaway actions without malicious intent.

Thinkbox

“Delegating action to AI must never eliminate human accountability.”

The NIST AI RMF and the EU AI Act both emphasize human authority, the ability to intervene in or halt a running system, and accountability structures. Neither framework treats autonomy alone as the trigger: the EU AI Act assigns its high-risk category by use case, and the NIST AI RMF is a voluntary framework without risk tiers. For agents, the practical reading is accountability not just for what the system does, but for what it might decide to do.

Techniques for Containing Autonomous Action

To reduce the risk of misuse or unanticipated escalation, organizations must embed safeguards before deployment, not as afterthoughts.

Table 44: Techniques to Control Autonomous AI Agents

| Control Method | Purpose |
|---|---|
| Execution gating | Require explicit human approval before triggering irreversible actions |
| Permission scoping | Limit tool access (e.g., read-only vs. write) based on task context |
| Goal interpreter validation | Sanitize or clarify vague prompts before planning begins |
| Audit-based behavior logging | Log agent decisions and tool use for traceability and review |
| Identity action constraints | Prevent agents from mimicking real individuals without consent |

These are not optional design features; they are deployment requirements for any system that grants an agent autonomy to act.
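As a rough illustration of how three of these controls (permission scoping, execution gating, and audit logging) might sit in one wrapper around an agent's tool calls, here is a minimal Python sketch. Every name in it (`Tool`, `GatedExecutor`, the `"read"`/`"write"` scope labels, the `approve` callback) is hypothetical and chosen for this example; it is not the API of any particular agent framework.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Any, Callable


class PermissionDenied(Exception):
    """The requested tool is outside the scopes granted for this task."""


class ApprovalRequired(Exception):
    """An irreversible action was attempted without human approval."""


@dataclass
class Tool:
    name: str
    func: Callable[..., Any]
    scope: str                  # hypothetical scope labels: "read" or "write"
    irreversible: bool = False  # True for actions that cannot be undone


@dataclass
class GatedExecutor:
    granted_scopes: set                   # scopes allowed for the current task
    approve: Callable[[str, dict], bool]  # asks a human; returns True to allow
    audit_log: list = field(default_factory=list)

    def run(self, tool: Tool, **kwargs: Any) -> Any:
        # Audit logging: record every attempt, including refused ones.
        entry = {
            "time": datetime.now(timezone.utc).isoformat(),
            "tool": tool.name,
            "args": kwargs,
            "outcome": "pending",
        }
        self.audit_log.append(entry)

        # Permission scoping: refuse tools outside the granted scopes.
        if tool.scope not in self.granted_scopes:
            entry["outcome"] = "denied: scope"
            raise PermissionDenied(f"{tool.name} needs scope '{tool.scope}'")

        # Execution gating: irreversible actions need explicit approval.
        if tool.irreversible and not self.approve(tool.name, kwargs):
            entry["outcome"] = "denied: not approved"
            raise ApprovalRequired(f"{tool.name} was not approved")

        try:
            result = tool.func(**kwargs)
        except Exception:
            entry["outcome"] = "failed"
            raise
        entry["outcome"] = "executed"
        return result


# Usage: a read-only task, with a reviewer callback that rejects everything.
deleted_paths = []
read_tool = Tool("read_file", lambda path: f"contents of {path}", scope="read")
delete_tool = Tool("delete_file", lambda path: deleted_paths.append(path),
                   scope="write", irreversible=True)

executor = GatedExecutor(granted_scopes={"read"}, approve=lambda name, args: False)
print(executor.run(read_tool, path="notes.txt"))
try:
    executor.run(delete_tool, path="notes.txt")
except PermissionDenied as err:
    print("blocked:", err)
```

The design choice worth noting is that the checks live in the executor, not in the agent's prompt: the agent can ask for anything, but only the deploying system decides what runs, and refused attempts still leave a log entry for review.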

Governance frameworks point the same way. The EU AI Act imposes human-oversight obligations on systems deployed in high-risk use cases, and ISO/IEC 23894 gives guidance on managing AI-related risk across the system lifecycle. Applied to agents with autonomous decision-making power, this means not only execution gating and permission scoping, but also well-defined rollback mechanisms, human oversight protocols, and strict boundaries on self-directed tool use, especially when the agent can trigger real-world actions.

These aren’t optional best practices; they’re now part of formal governance expectations. ISO/IEC 23894 also calls for risk assessment and risk treatment, which for AI agents that connect with external systems covers failure containment and interaction control. This reinforces the idea that trust cannot depend on the agent’s behavior alone; it must be built into the system that deploys it.
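One way to picture failure containment built into the deploying system is a guard that counts what the agent does and halts it when a limit is crossed. The sketch below is an assumption-laden illustration, not a method prescribed by any standard: `ContainmentGuard`, `AgentHalted`, and the two thresholds are hypothetical names and values. It stops an agent that either exceeds a total action budget or repeats the same action on the same target too often, which is the pattern in the file-deletion loop described earlier.

```python
from collections import Counter


class AgentHalted(Exception):
    """The guard stopped the agent; a human must review before it resumes."""


class ContainmentGuard:
    def __init__(self, max_actions: int = 20, max_repeats: int = 3):
        self.max_actions = max_actions  # total actions allowed per task
        self.max_repeats = max_repeats  # identical (tool, target) calls allowed
        self.total = 0
        self.seen = Counter()
        self.halted = False

    def check(self, tool_name: str, target: str) -> None:
        """Call before every tool invocation; raises AgentHalted on a breach."""
        if self.halted:
            raise AgentHalted("agent is halted pending human review")

        self.total += 1
        self.seen[(tool_name, target)] += 1

        if self.total > self.max_actions:
            self._halt(f"action budget of {self.max_actions} exceeded")
        if self.seen[(tool_name, target)] > self.max_repeats:
            self._halt(f"{tool_name} repeated on {target!r} more than "
                       f"{self.max_repeats} times")

    def _halt(self, reason: str) -> None:
        # Once halted, the guard stays halted until a person replaces it.
        self.halted = True
        raise AgentHalted(reason)


# Usage: an agent stuck deleting the same file is stopped on the third attempt.
guard = ContainmentGuard(max_actions=10, max_repeats=2)
completed = 0
try:
    for _ in range(5):
        guard.check("delete_file", "scratch.txt")
        completed += 1  # the real tool call would happen here
except AgentHalted as err:
    print(f"halted after {completed} actions: {err}")
```

The guard deliberately has no method for the agent to reset it. Resuming requires a person to create a new guard, which keeps the stop decision outside the agent's own control.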

The danger isn’t that agents make decisions, it’s that no one built a system to stop them.

As agents become actors, not just tools, deployment safety must expand to include context, constraint, and containment.

While Section 6.2.2 highlighted the risks of AI agents acting without control, autonomy isn’t the only source of deployment failure. Even systems that don’t act on their own can still cause serious harm, simply by leaking information through the surrounding infrastructure. From plugins that over-share to logs that silently retain sensitive data, the next section examines how trust can erode at the system edges, even when the model itself behaves correctly.

TRAI Challenges: Deployment Failure Diagnostics

Scenario:

You're auditing three AI systems that failed post-deployment. Identify the root cause based on their symptoms.

🧩 Your Tasks:

Match each system failure to the most probable design gap:

1️⃣ An AI agent deletes internal files recursively without warning.

2️⃣ A chatbot sends legal advice to customers without tagging or review.

3️⃣ A health AI gives high-confidence recommendations that don't align with local guidelines.

Design Gaps:

✅ No permission or action gating for agents

✅ No output classification or review buffer

✅ No environment-specific validation or contextualization

1. Allyn, B. (2025, July 9). Impostor AI tricked foreign officials by impersonating Rubio, raising alarms in D.C. NPR. https://www.npr.org/2025/07/09/nx-s1-5462195/impostor-ai-impersonate-rubio-foreign-officials

2. Significant Gravitas. (2023). AutoGPT agent deletion loop incident. GitHub. https://github.com/Torantulino/Auto-GPT/issues