6.2.3. Privacy Failures in Interfaces, Logs, and Plugins


“It’s not always the model that leaks, it’s everything around it.”

Trust Breaches Don’t Always Look Like Breaches

In the previous section, we explored what happens when AI agents act too freely. But even when systems don’t act on their own, they can still cause harm, not through what they do but through what they expose.

When most people think of privacy failures in AI, they imagine obvious breaches: a chatbot revealing training data, or a model repeating sensitive information. But increasingly, the real risk comes from the invisible infrastructure around the model (interfaces, third-party plugins, log files, and integrations), which silently records, transmits, or repurposes user data.

These aren’t accidents. They’re often features designed without privacy by default.

Privacy doesn’t just fail in what the model says. It fails in where the data goes after the model answers.

When Logging and Plugins Become the Weakest Link

Case: Samsung Internal Code Leak via ChatGPT (2023)

Samsung engineers used ChatGPT to debug proprietary code. The prompts, containing sensitive internal logic, were logged by OpenAI’s standard system, which retained inputs for model improvement. Because Samsung had not disabled logging or configured a privacy-safe inference mode, the data was stored outside their control. The model worked as intended, but the system quietly compromised proprietary information.[4]

Other real-world examples include:

- Plugins summarizing Zoom meetings without consent

- Interfaces that auto-log all user inputs, including identifiable or sensitive content

- Browser extensions that expose session tokens or credentials through side-channel leakage

These failures often go undetected until trust is already broken.
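The plugin examples above share one root cause: the integration receives more data than it needs. A boundary check before anything leaves your system can narrow that. The sketch below is a minimal Python illustration built on a hypothetical `PluginPolicy` allowlist and consent flag of our own design; it is not the API of any plugin platform.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PluginPolicy:
    """Hypothetical per-plugin policy: what a plugin may see, and on what terms."""
    name: str
    allowed_fields: frozenset
    requires_consent: bool = True


class PluginBoundaryError(Exception):
    """Raised when data would cross a plugin boundary without authorization."""


def build_plugin_payload(policy: PluginPolicy, context: dict, user_consented: bool) -> dict:
    """Return only the allowlisted fields of `context` for this plugin.

    Deny by default: any field not named in the policy is dropped, including
    fields added to `context` after the policy was written.
    """
    if policy.requires_consent and not user_consented:
        raise PluginBoundaryError(f"{policy.name}: user consent is required")
    return {k: v for k, v in context.items() if k in policy.allowed_fields}


# Example: a meeting summarizer may see the transcript, but not who attended
# or the caller's session token.
summarizer = PluginPolicy(
    name="meeting-summarizer",
    allowed_fields=frozenset({"meeting_title", "transcript"}),
)
context = {
    "meeting_title": "Q3 planning",
    "transcript": "We agreed to ship the beta in September.",
    "attendee_emails": ["ana@example.com"],
    "session_token": "tok_123",
}
payload = build_plugin_payload(summarizer, context, user_consented=True)
print(sorted(payload))  # ['meeting_title', 'transcript']
```

Denying by default is the key design choice: a field added to `context` later stays inside the boundary until someone deliberately adds it to the allowlist.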

Thinkbox

“If you can’t explain what data you’re storing, you shouldn’t be storing it.”

This warning from the European Data Protection Board (EDPB) was issued in response to growing misuse of AI logs and plugins that lack transparency. The EU AI Act and GDPR jointly require that systems demonstrate purpose limitation and access minimization, or risk legal enforcement.

The Real Problem: Privacy That Fails Quietly

Unlike model hallucinations or agent misbehavior, privacy loss doesn’t announce itself. It seeps through logs, plugin chains, and integrations that were never designed with containment in mind.

GDPR Articles 5 and 25 require organizations to uphold, and be able to demonstrate, data minimization, purpose limitation, and data protection by design and by default,[2] while ISO/IEC 27559 sets out a framework for de-identifying personal data.[1] Yet many AI deployments still retain prompts by default, chain user data across tools, and store logs indefinitely: practices that fall short of technical expectations and can breach legal obligations.
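Indefinite log retention is one of the easier practices to fix mechanically. The following minimal sketch assumes prompts are logged to a hypothetical SQLite table `prompt_log` with Unix-epoch timestamps and deletes anything older than a retention window. The 30-day value is an illustrative assumption, not a figure from the GDPR, which requires only that identifiable data be kept no longer than necessary for its purpose.

```python
import sqlite3
from datetime import datetime, timedelta, timezone

# Illustrative retention window (assumption); choose it per purpose.
RETENTION = timedelta(days=30)


def purge_expired(conn: sqlite3.Connection, now: datetime,
                  retention: timedelta = RETENTION) -> int:
    """Delete prompt-log rows older than the retention window.

    Assumes a hypothetical table prompt_log(created_at INTEGER, ...) holding
    Unix-epoch seconds. Returns the number of rows deleted.
    """
    cutoff = int((now - retention).timestamp())
    with conn:  # commits on success, rolls back on error
        cur = conn.execute("DELETE FROM prompt_log WHERE created_at < ?", (cutoff,))
    return cur.rowcount


conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE prompt_log (id INTEGER PRIMARY KEY, created_at INTEGER, prompt TEXT)"
)
now = datetime(2024, 6, 1, tzinfo=timezone.utc)
rows = [
    (int((now - timedelta(days=age)).timestamp()), f"prompt logged {age} days ago")
    for age in (1, 29, 31, 90)
]
conn.executemany("INSERT INTO prompt_log (created_at, prompt) VALUES (?, ?)", rows)
print(purge_expired(conn, now))  # 2 (the 31- and 90-day-old rows)
```

In practice a job like this would run on a schedule, with the window set per purpose rather than globally.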

The EU AI Act[3] adds transparency and record-keeping obligations, particularly for high-risk systems, so opaque logging and undocumented plugin data flows become compliance problems, not just privacy risks. In short, if your system can’t explain what data was collected, why, and where it went, it is likely already out of compliance.

🎯 The model doesn’t have to say anything wrong: if the system remembers too much, that alone can break privacy law.
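One way to make “what was collected, why, and where it went” answerable is to log metadata about each data flow instead of the data itself. This sketch uses a hypothetical `DataFlowRecord` structure; the field names, categories, and destination string are illustrative assumptions, not a format prescribed by the GDPR or the EU AI Act.

```python
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone


@dataclass(frozen=True)
class DataFlowRecord:
    """Metadata about one data flow: what kind of data, why, and where it went.

    Deliberately has no field for the raw prompt or response.
    """
    request_id: str
    data_categories: tuple            # e.g. ("source_code",), never the values
    purpose: str                      # declared purpose for this processing
    destination: str                  # system or vendor that received the data
    retention_days: int               # how long the destination may keep it
    timestamp: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )


def to_audit_line(record: DataFlowRecord) -> str:
    """Serialize a record as one JSON line for an append-only audit log."""
    return json.dumps(asdict(record), sort_keys=True)


record = DataFlowRecord(
    request_id="req-0001",
    data_categories=("source_code",),
    purpose="debugging assistance",
    destination="azure-openai/eu-deployment",
    retention_days=0,
)
print(to_audit_line(record))
```

Because the record never holds content, the audit trail itself does not become another copy of the sensitive data it describes.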

How to Seal the Cracks Before They Spread

Protecting user trust requires more than redacting outputs. It requires protecting the entire environment around your model, from first input to final storage.

Table 45: Techniques for Privacy-Aware AI Deployment

| Technique | Purpose | Real-World Tool / Platform |
|---|---|---|
| Prompt Redaction & Truncation | Remove or mask sensitive data before inference | Microsoft Presidio, Google Cloud DLP |
| Zero-Data Inference Modes | Prevent storage of user input/output after inference | Azure OpenAI Private Deployment, OpenAI API Zero Data Retention |
| Plugin Boundary Validation | Limit data exposure to third-party integrations | OpenAI Plugin Security, Zapier Platform Controls |
| Anonymization Pipelines | Remove or replace identifiable data during storage | Google Cloud DLP, Microsoft Presidio |
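The first and last rows of the table can be illustrated together. Below is a minimal Python sketch of redaction with optional pseudonymization, using a few hand-written regular expressions as a stand-in for a real detector. The patterns (including the `sk-`/`pk-` key format) are illustrative assumptions and will miss many real identifiers, which is why tools such as Microsoft Presidio combine pattern matching with named-entity recognition.

```python
import hashlib
import hmac
import re
from typing import Optional

# Illustrative patterns only (assumptions). Order matters: API keys are masked
# before phone numbers so digit runs inside a key are not taken for a phone.
PATTERNS = {
    "API_KEY": re.compile(r"\b(?:sk|pk)-[A-Za-z0-9]{16,}\b"),
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"),
    "PHONE": re.compile(r"\+?\d[\d\s().-]{7,}\d"),
}


def redact(text: str, key: Optional[bytes] = None) -> str:
    """Mask sensitive spans before text is sent for inference or stored.

    Without a key, each match becomes a fixed placeholder such as <EMAIL>.
    With a key, each match becomes a keyed HMAC-SHA256 token such as
    <EMAIL:1a2b3c4d>, so equal values map to equal tokens (pseudonymization).
    """
    for label, pattern in PATTERNS.items():
        def replace(match, label=label):
            if key is None:
                return f"<{label}>"
            digest = hmac.new(key, match.group().encode(), hashlib.sha256).hexdigest()
            return f"<{label}:{digest[:8]}>"

        text = pattern.sub(replace, text)
    return text


prompt = "Contact ana@example.com or +1 (555) 010-9999 about key sk-abcdefghijklmnop1234"
print(redact(prompt))
# Contact <EMAIL> or <PHONE> about key <API_KEY>
```

With a key, the same email always maps to the same token, so stored records stay joinable without the raw value. Pseudonymized data of this kind is still personal data under the GDPR (Recital 26), so the key should be kept separate from the logs.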

🔍 Tool in Focus: Azure OpenAI Private Deployment

💡 What It Is

Azure OpenAI's Private Deployment lets organizations run GPT models through an Azure OpenAI resource in their own Azure subscription, with enterprise-grade control over inference activity. Microsoft states that prompts and completions are not made available to OpenAI and are not used to train models.

🧰 How It Works

You invoke GPT models through Azure-hosted API endpoints, but unlike public access:

- Traffic can be confined to your private virtual network (VNet) using private endpoints

- Prompts and completions are not used to retrain models

- By default, prompts and completions may be stored for up to 30 days for abuse monitoring; approved customers can turn this storage off

- You can enforce strict access policies through Microsoft Entra ID (formerly Azure Active Directory)
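A client following this pattern can authenticate with Microsoft Entra ID instead of an API key, so access is governed by role assignments rather than a shared secret. The sketch below assumes the `openai` (v1 or later) and `azure-identity` Python packages; the environment variable names and the `2024-02-01` API version are assumptions to adjust for your resource. Private networking is configured on the Azure resource itself, not in this code.

```python
import os

# Assumed environment variable names; adjust to your deployment.
ENDPOINT_ENV = "AZURE_OPENAI_ENDPOINT"      # e.g. https://<resource>.openai.azure.com/
DEPLOYMENT_ENV = "AZURE_OPENAI_DEPLOYMENT"  # name of your model deployment
API_VERSION = "2024-02-01"                  # assumed GA API version
ENTRA_SCOPE = "https://cognitiveservices.azure.com/.default"


def make_client():
    """Create an Azure OpenAI client that authenticates with Microsoft Entra ID.

    No API key is involved: tokens come from DefaultAzureCredential, so access
    is controlled by Azure RBAC role assignments on the resource.
    """
    # Imported inside the function so the module loads without the SDKs installed.
    from azure.identity import DefaultAzureCredential, get_bearer_token_provider
    from openai import AzureOpenAI

    token_provider = get_bearer_token_provider(DefaultAzureCredential(), ENTRA_SCOPE)
    return AzureOpenAI(
        azure_endpoint=os.environ[ENDPOINT_ENV],
        azure_ad_token_provider=token_provider,
        api_version=API_VERSION,
    )


def summarize(client, text: str) -> str:
    """Send one (already redacted) text to the deployment and return the summary."""
    response = client.chat.completions.create(
        model=os.environ[DEPLOYMENT_ENV],  # Azure routes by deployment name
        messages=[
            {"role": "system", "content": "Summarize the user's text in two sentences."},
            {"role": "user", "content": text},
        ],
    )
    return response.choices[0].message.content
```

A typical call would be `summarize(make_client(), redacted_text)`. The calling identity needs an appropriate role on the resource, for example Cognitive Services OpenAI User.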

🔐 Key Features

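- Zero-data retention for prompts and completions once abuse-monitoring storage is turned off (requires Microsoft approval)

- RBAC (Role-Based Access Control) via Microsoft Entra ID (formerly Azure AD)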

- Private networking via VNets and firewalls

- Compatible with your existing compliance and audit tools

📌 Use Cases

- Hospitals running AI-based summarization on patient records

- Legal teams drafting internal reports using proprietary data

- Financial institutions running risk assessments on transaction logs

For more details: Azure OpenAI Data Privacy

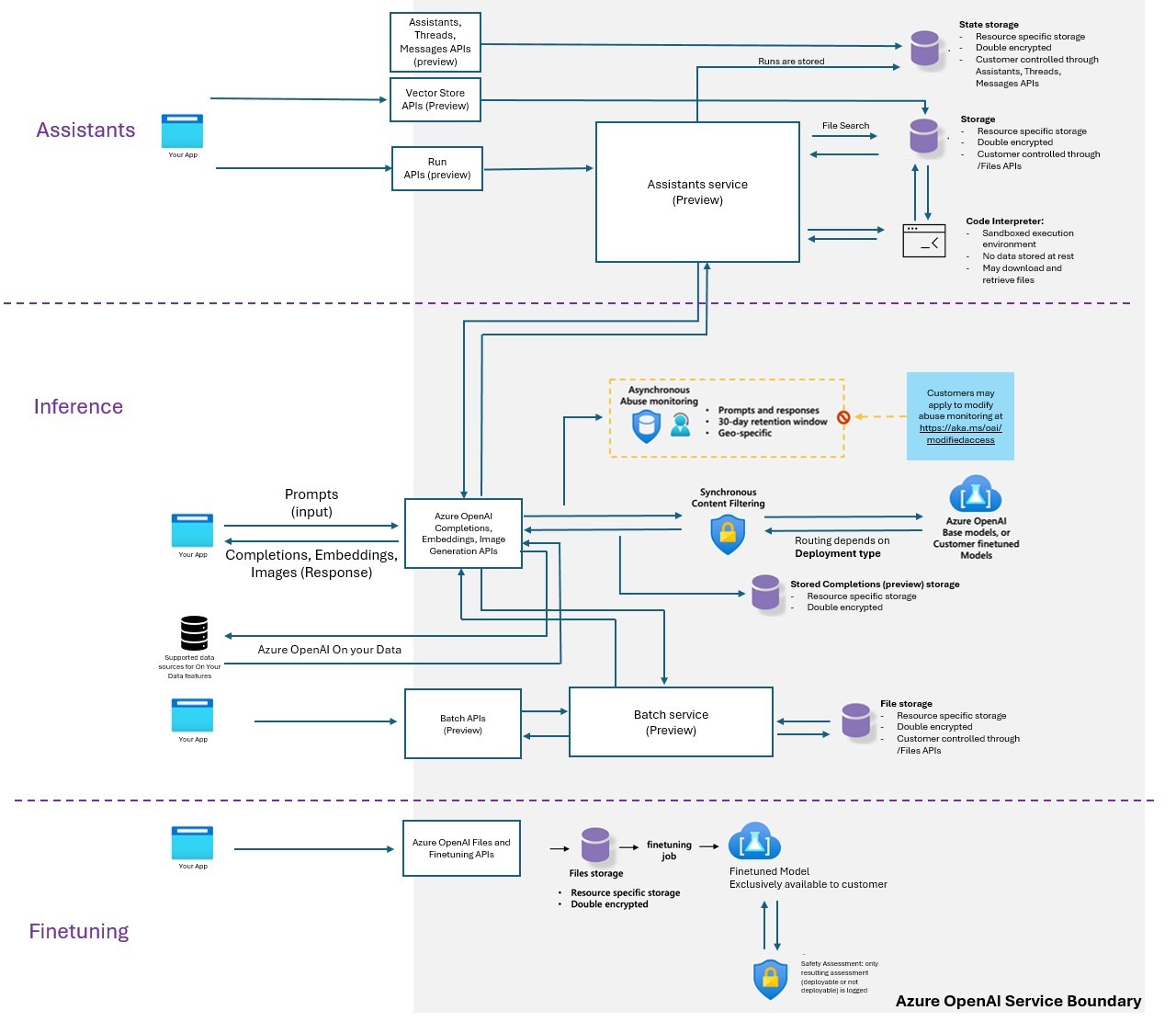

This official diagram from Microsoft illustrates how inputs, completions, and stored data are routed in an Azure-hosted LLM deployment. It highlights secured storage, abuse monitoring, and deployment-specific retention, reinforcing that trustworthy deployment depends not just on the model but on how the surrounding systems handle sensitive data. Source:

These controls are not just good practice; they are compliance mechanisms under leading global frameworks. Yet they are often ignored because the model itself “passed” privacy testing.

But privacy-aware deployment means protecting more than just the model. It means building a system that remembers only what it must, and forgets everything else.

1. ISO/IEC. (2021). ISO/IEC 27559: Privacy enhancing data de-identification framework. International Organization for Standardization. https://www.iso.org/standard/80311.html

2. European Union. (2016). GDPR, Articles 5 & 25. https://eur-lex.europa.eu/eli/reg/2016/679/oj

3. European Union. (2024). EU Artificial Intelligence Act – Final Text. https://artificialintelligenceact.eu/the-act/

4. Ray, S. (2023, May 2). Samsung bans ChatGPT and other chatbots for employees after sensitive code leak. Forbes. https://www.forbes.com/sites/siladityaray/2023/05/02/samsung-bans-chatgpt-and-other-chatbots-for-employees-after-sensitive-code-leak/