7.2. Can Humans See Enough to Intervene?

“Oversight is meaningless if it’s buried in noise, filtered by design, or never reaches the right person.”

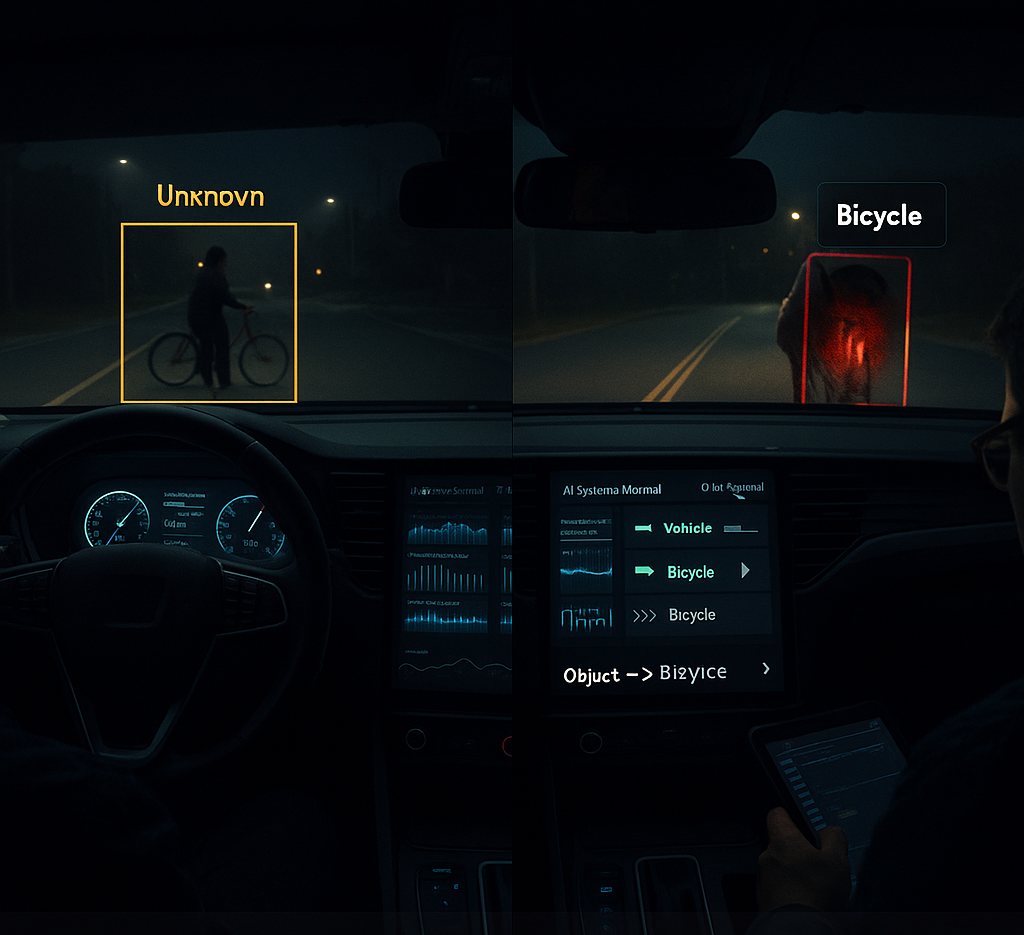

In March 2018, an Uber self-driving test vehicle in Tempe, Arizona detected a pedestrian approximately six seconds before impact, registering the object as "unknown" and then "vehicle" before ultimately labeling it "bicycle" just before the crash. Although the system had internal confidence scores that could have triggered a stop, it communicated no alert to the human safety operator in time [1].

This AI-generated figure simulates the 2018 Uber self-driving crash in Tempe, Arizona, where the system initially labeled a pedestrian as “Unknown” and only recognized it as a “Bicycle” moments before impact, without triggering any human alert. The interface reflects a governance failure: detection occurred, but escalation did not. Inspired by: WIRED, 2018.

The operator, seated behind the wheel, was monitoring a center-console interface. There were **no real-time warnings, no urgent visual cues**, and no intervention prompt. The system relied on the human to intervene, but the interface **never made the danger legible or actionable**.

What good is oversight if the reviewer can’t see the risk, or is falsely assured that everything is fine?

This wasn’t just a failure of perception. It was a failure of interface-level governance, where the right data existed but was presented in the wrong way, at the wrong moment, to the wrong person.

In Section 7.1, we saw that assigning authority is essential, but insufficient. Now we turn to the next question:

Can oversight succeed if humans are shown the wrong signals, or none at all?

This section examines the human interface of oversight: not just whether data is exposed, but whether it is interpretable, actionable, and timely.

We explore:

- How dashboards, logs, and summaries shape (or distort) reviewer judgment

- Which tools actually help humans intervene, and which bury risk in noise

- Why co-design with real-world users like safety operators and clinicians is critical to meaningful oversight

Because assigning someone the power to intervene isn’t enough. They must be able to see the risk, and know what to do about it.

Bibliography

1. Madrigal, A. C. (2018, December 6). How the self-driving car industry designs the human out of the loop. Wired. https://www.wired.com/story/uber-crash-arizona-human-train-self-driving-cars/